6.2_Robbins-Monro_algorithm

6.2 Robbins-Monro algorithm

Stochastic approximation refers to a broad class of stochastic iterative algorithms for solving root-finding or optimization problems [24]. Compared to many other root-finding

algorithms such as gradient-based ones, stochastic approximation is powerful in the sense that it does not require the expression of the objective function or its derivative.

The Robbins-Monro (RM) algorithm is a pioneering work in the field of stochastic approximation [24-27]. The famous stochastic gradient descent algorithm is a special form of the RM algorithm, as shown in Section 6.4. We next introduce the details of the RM algorithm.

Suppose that we would like to find the root of the equation

where is the unknown variable and is a function. Many problems can be formulated as root-finding problems. For example, if is an objective function to be optimized, this optimization problem can be converted to solving . In addition, an equation such as , where is a constant, can also be converted to the above equation by rewriting as a new function.

If the expression of or its derivative is known, there are many numerical algorithms that can be used. However, the problem we are facing is that the expression of the function is unknown. For example, the function may be represented by an artificial neural network whose structure and parameters are unknown. Moreover, we can only obtain a noisy observation of :

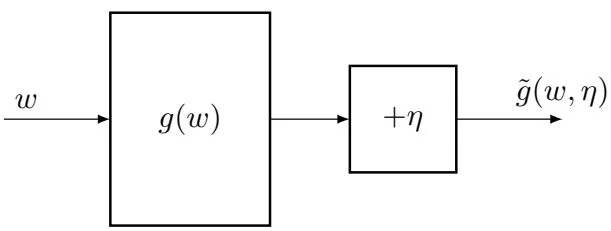

where is the observation error, which may or may not be Gaussian. In summary, it is a black-box system where only the input and the noisy output are known (see Figure 6.2). Our aim is to solve using and .

Figure 6.2: An illustration of the problem of solving from and .

The RM algorithm that can solve is

where is the th estimate of the root, is the th noisy observation, and is a positive coefficient. As can be seen, the RM algorithm does not require any information about the function. It only requires the input and output.

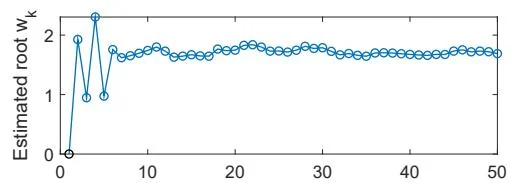

Figure 6.3: An illustrative example of the RM algorithm.

To illustrate the RM algorithm, consider an example in which . The true root is . Now, suppose that we can only observe the input and the output , where is i.i.d. and obeys a standard normal distribution with a zero mean and a standard deviation of 1. The initial guess is , and the coefficient is . The evolution process of is shown in Figure 6.3. Even though the observation is corrupted by noise , the estimate can still converge to the true root. Note that the initial guess must be properly selected to ensure convergence for the specific function of . In the following subsection, we present the conditions under which the RM algorithm converges for any initial guesses.

6.2.1 Convergence properties

Why can the RM algorithm in (6.5) find the root of ? We next illustrate the idea with an example and then provide a rigorous convergence analysis.

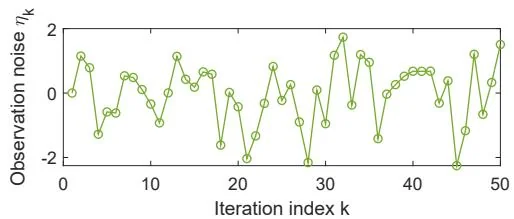

Consider the example shown in Figure 6.4. In this example, . The true root of is . We apply the RM algorithm with and . To better illustrate the reason for convergence, we simply set , and consequently, . The RM algorithm in this case is . The resulting generated by the RM algorithm is shown in Figure 6.4. It can be seen that converges to the true root .

This simple example can illustrate why the RM algorithm converges.

When , we have . Then, . If is sufficiently small, we have . As a result, is closer to than .

When , we have . Then, . If is sufficiently small, we have . As a result, is closer to than .

In either case, is closer to than . Therefore, it is intuitive that converges to .

Figure 6.4: An example for illustrating the convergence of the RM algorithm.

The above example is simple since the observation error is assumed to be zero. It would be nontrivial to analyze the convergence in the presence of stochastic observation errors. A rigorous convergence result is given below.

Theorem 6.1 (Robbins-Monro theorem). In the Robbins-Monro algorithm in (6.5), if

(a) for all ;

(b) and ;

(c) and ;

where , then almost surely converges to the root satisfying .

We postpone the proof of this theorem to Section 6.3.3. This theorem relies on the notion of almost sure convergence, which is introduced in Appendix B.

The three conditions in Theorem 6.1 are explained as follows.

In the first condition, indicates that is a monotonically increasing function. This condition ensures that the root of exists and is unique. If is monotonically decreasing, we can simply treat as a new function that is monotonically increasing.

As an application, we can formulate an optimization problem in which the objective function is as a root-finding problem: . In this case, the condition that is monotonically increasing indicates that is convex, which is a commonly adopted assumption in optimization problems.

The inequality indicates that the gradient of is bounded from above. For example, satisfies this condition, but does not.

The second condition about is interesting. We often see conditions like this in reinforcement learning algorithms. In particular, the condition means that is bounded from above. It requires that converges to zero as . The condition means that is infinitely large. It requires that should not converge to zero too fast. These conditions have interesting properties, which will be analyzed in detail shortly.

The third condition is mild. It does not require the observation error to be Gaussian. An important special case is that is an i.i.d. stochastic sequence satisfying and . In this case, the third condition is valid because is independent of and hence we have and .

We next examine the second condition about the coefficients more closely.

Why is the second condition important for the convergence of the RM algorithm?

This question can naturally be answered when we present a rigorous proof of the above theorem later. Here, we would like to provide some insightful intuition.

First, indicates that as . Why is this condition important? Suppose that the observation is always bounded. Since

if , then and hence , indicating that and approach each other when . Otherwise, if does not converge, then may still fluctuate when .

Second, indicates that should not converge to zero too fast. Why is this condition important? Summarizing both sides of the equations of , , , ... gives

If , then is also bounded. Let denote the finite upper bound such that

If the initial guess is selected far away from so that , then it is impossible to have according to (6.6). This suggests that the RM algorithm cannot find the true solution in this case. Therefore, the condition is necessary to ensure convergence given an arbitrary initial guess.

What kinds of sequences satisfy and ?

One typical sequence is

On the one hand, it holds that

where is called the Euler-Mascheroni constant (or Euler's constant) [28]. Since as , we have

In fact, is called the harmonic number in number theory [29]. On the other hand, it holds that

Finding the value of is known as the Basel problem [30].

In summary, the sequence satisfies the second condition in Theorem 6.1. Notably, a slight modification, such as or where is bounded, also preserves this condition.

In the RM algorithm, is often selected as a sufficiently small constant in many applications. Although the second condition is not satisfied anymore in this case because rather than , the algorithm can still converge in a certain sense [24, Section 1.5]. In addition, in the example shown in Figure 6.3 does not satisfy the first condition, but the RM algorithm can still find the root if the initial guess is adequately (not arbitrarily) selected.

6.2.2 Application to mean estimation

We next apply the Robbins-Monro theorem to analyze the mean estimation problem, which has been discussed in Section 6.1. Recall that

is the mean estimation algorithm in (6.4). When , we can obtain the analytical expression of as . However, we would not be able to obtain an analytical expression when given general values of . In this case, the convergence analysis is nontrivial. We can show that the algorithm in this case is a special RM

algorithm and hence its convergence naturally follows.

In particular, define a function as

The original problem is to obtain the value of . This problem is formulated as a root-finding problem to solve . Given a value of , the noisy observation that we can obtain is , where is a sample of . Note that can be written as

where

The RM algorithm for solving this problem is

which is exactly the algorithm in (6.4). As a result, it is guaranteed by Theorem 6.1 that converges to almost surely if , , and is i.i.d. It is worth mentioning that the convergence property does not rely on any assumption regarding the distribution of .