
2.2 Integrated GPUs

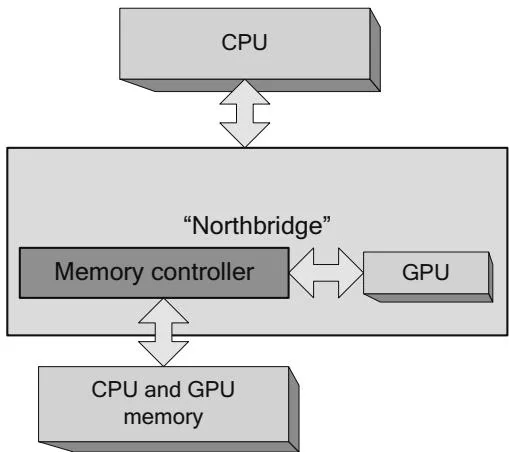

Here, the term integrated means "integrated into the chipset." As Figure 2.8 shows, the memory pool that previously belonged only to the CPU is now shared between the CPU and the GPU that is integrated into the chipset. Examples of NVIDIA chipsets with CUDA-capable GPUs include the MCP79 (for laptops and netbooks) and MCP89. MCP89 is the last and greatest CUDA-capable x86 chipset that NVIDIA will manufacture; besides an integrated L3 cache, it has 3x as many SMs as the MCP7x chipsets.

Figure 2.8 Integrated GPU.

CUDA's APIs for mapped pinned memory have special meaning on integrated GPUs. These APIs map host memory allocations into the address space of CUDA kernels so they can be accessed directly. They are also known as "zero-copy," because the memory is shared and need not be copied over the bus. In fact, for transfer-bound workloads, an integrated GPU can outperform a much larger discrete GPU.
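A minimal zero-copy sketch using the CUDA runtime API follows. It assumes device 0 is the GPU of interest. `zeroCopyIncrement` and `incrementKernel` are hypothetical names chosen for illustration. The calls it relies on are `cudaSetDeviceFlags(cudaDeviceMapHost)`, `cudaHostAlloc` with `cudaHostAllocMapped`, and `cudaHostGetDevicePointer`. The kernel reads and writes host memory through the device pointer, and no `cudaMemcpy` appears anywhere.

```cuda
#include <cuda_runtime.h>

// Each thread increments one element. `p` is a device-side alias for host
// memory, so these loads and stores go directly to system memory.
__global__ void incrementKernel(int *p, int n)
{
    int i = blockIdx.x * blockDim.x + threadIdx.x;
    if (i < n)
        p[i] += 1;
}

// Hypothetical helper. Returns 0 on success, 1 if device 0 is missing or
// cannot map host memory, and -1 on a CUDA error or an incorrect result.
int zeroCopyIncrement(int n)
{
    if (n <= 0)
        return -1;
    cudaDeviceProp prop;
    if (cudaGetDeviceProperties(&prop, 0) != cudaSuccess || !prop.canMapHostMemory)
        return 1;

    // Request mapped pinned memory support. Older CUDA versions reject this
    // call once the context exists, so that specific error is tolerated.
    cudaError_t status = cudaSetDeviceFlags(cudaDeviceMapHost);
    if (status != cudaSuccess && status != cudaErrorSetOnActiveProcess)
        return -1;
    (void)cudaGetLastError();  // clear any tolerated error

    // Pinned host allocation that is also mapped into the device address space.
    int *hostPtr = nullptr;
    if (cudaHostAlloc((void **)&hostPtr, n * sizeof(int), cudaHostAllocMapped) != cudaSuccess)
        return -1;
    for (int i = 0; i < n; i++)
        hostPtr[i] = i;

    // Device pointer to the same physical memory: no cudaMemcpy is needed.
    int *devPtr = nullptr;
    if (cudaHostGetDevicePointer((void **)&devPtr, hostPtr, 0) != cudaSuccess) {
        cudaFreeHost(hostPtr);
        return -1;
    }

    const int threads = 256;
    incrementKernel<<<(n + threads - 1) / threads, threads>>>(devPtr, n);
    // Launches are asynchronous; synchronize before the CPU reads the results.
    int rc = (cudaGetLastError() == cudaSuccess &&
              cudaDeviceSynchronize() == cudaSuccess) ? 0 : -1;
    for (int i = 0; rc == 0 && i < n; i++)
        if (hostPtr[i] != i + 1)
            rc = -1;

    cudaFreeHost(hostPtr);
    return rc;
}
```

On an integrated GPU, the mapped buffer is the same system memory the GPU already uses. That is why this pattern avoids the transfer cost entirely there, rather than just overlapping it with computation.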

"Write-combined" memory allocations also have significance on integrated GPUs: cache snoops to the CPU are inhibited on this memory, which increases GPU performance when accessing it. Of course, if the CPU reads from the memory, the usual performance penalties for WC memory apply, because CPU reads of write-combined memory are not cached.
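The sketch below shows one way to apply this with the runtime API. It again assumes device 0. `writeCombinedScale` and `scaleKernel` are hypothetical names. Write-combining is requested by OR-ing `cudaHostAllocWriteCombined` into the `cudaHostAlloc` flags. The buffer the CPU only writes and the GPU only reads gets the WC flag. The result buffer, which the CPU must read back, is ordinary mapped pinned memory, so the CPU-side read penalty never applies.

```cuda
#include <cuda_runtime.h>

// Reads the write-combined input and writes to a separate, cacheable buffer.
__global__ void scaleKernel(const float *in, float *out, float k, int n)
{
    int i = blockIdx.x * blockDim.x + threadIdx.x;
    if (i < n)
        out[i] = k * in[i];
}

// Hypothetical helper. Returns 0 on success, 1 if device 0 is missing or
// cannot map host memory, and -1 on a CUDA error or an incorrect result.
int writeCombinedScale(int n)
{
    if (n <= 0)
        return -1;
    cudaDeviceProp prop;
    if (cudaGetDeviceProperties(&prop, 0) != cudaSuccess || !prop.canMapHostMemory)
        return 1;
    cudaError_t status = cudaSetDeviceFlags(cudaDeviceMapHost);
    if (status != cudaSuccess && status != cudaErrorSetOnActiveProcess)
        return -1;
    (void)cudaGetLastError();  // clear any tolerated error

    // Input: CPU writes, GPU reads -> mapped + write-combined.
    // Output: GPU writes, CPU reads -> mapped but cacheable (no WC flag).
    float *in = nullptr, *out = nullptr;
    if (cudaHostAlloc((void **)&in, n * sizeof(float),
                      cudaHostAllocMapped | cudaHostAllocWriteCombined) != cudaSuccess)
        return -1;
    if (cudaHostAlloc((void **)&out, n * sizeof(float), cudaHostAllocMapped) != cudaSuccess) {
        cudaFreeHost(in);
        return -1;
    }

    // The CPU only writes to `in`; reading it back on the CPU would be slow.
    for (int i = 0; i < n; i++)
        in[i] = (float)i;

    float *dIn = nullptr, *dOut = nullptr;
    int rc = 0;
    if (cudaHostGetDevicePointer((void **)&dIn, in, 0) != cudaSuccess ||
        cudaHostGetDevicePointer((void **)&dOut, out, 0) != cudaSuccess)
        rc = -1;
    if (rc == 0) {
        scaleKernel<<<(n + 255) / 256, 256>>>(dIn, dOut, 2.0f, n);
        if (cudaGetLastError() != cudaSuccess || cudaDeviceSynchronize() != cudaSuccess)
            rc = -1;
    }
    // Verify using `out`, which is safe and fast for the CPU to read.
    for (int i = 0; rc == 0 && i < n; i++)
        if (out[i] != 2.0f * (float)i)
            rc = -1;

    cudaFreeHost(in);
    cudaFreeHost(out);
    return rc;
}
```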

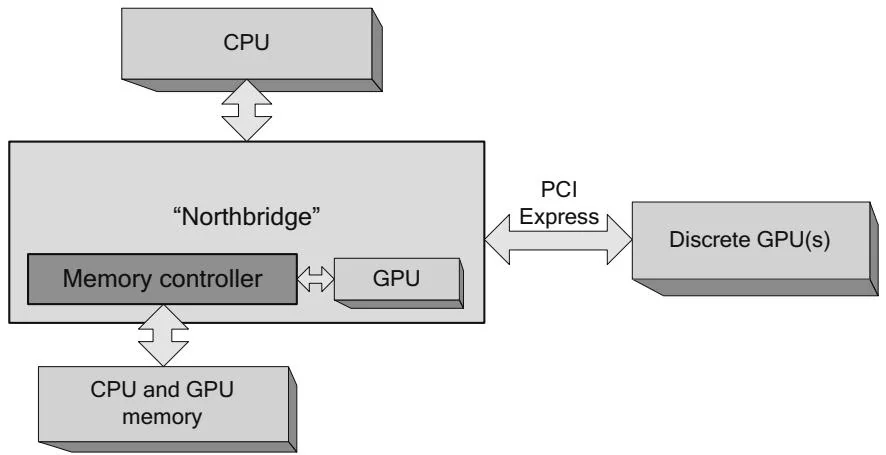

Integrated GPUs are not mutually exclusive with discrete ones; the MCP7x and MCP89 chipsets provide for PCI Express connections (Figure 2.9). On such systems, CUDA prefers to run on the discrete GPU(s) because most CUDA applications are authored with them in mind. For example, a CUDA application designed to run on a single GPU will automatically select the discrete one.
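An application that wants to make the choice of discrete GPU explicit, instead of relying on the default device, could scan the devices with the runtime API. The sketch below does that. `pickDiscreteDevice` is a hypothetical helper. It returns the ordinal of the first device whose `cudaDeviceProp::integrated` field is zero. It falls back to an integrated GPU when no discrete one is present, and returns -1 when no CUDA device is usable.

```cuda
#include <cuda_runtime.h>

// Hypothetical helper: first discrete GPU, else first integrated GPU, else -1.
int pickDiscreteDevice()
{
    int count = 0;
    if (cudaGetDeviceCount(&count) != cudaSuccess || count == 0)
        return -1;

    int fallback = -1;
    for (int dev = 0; dev < count; dev++) {
        cudaDeviceProp prop;
        if (cudaGetDeviceProperties(&prop, dev) != cudaSuccess)
            continue;
        if (!prop.integrated)
            return dev;       // discrete GPU found
        if (fallback < 0)
            fallback = dev;   // remember the first integrated GPU
    }
    return fallback;
}
```

A caller would typically follow it with `if (dev >= 0) cudaSetDevice(dev);` before allocating memory or launching kernels.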

CUDA applications can query whether a GPU is integrated by examining cudaDeviceProp.integrated or by passing CU_DEVICE_ATTRIBUTE_INTEGRATED to cuDeviceGetAttribute().
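The sketch below shows both queries side by side. `isIntegratedRuntime` and `isIntegratedDriver` are hypothetical wrappers. The first reads the `integrated` field filled in by `cudaGetDeviceProperties`. The second calls `cuInit` and `cuDeviceGet`, then `cuDeviceGetAttribute` with `CU_DEVICE_ATTRIBUTE_INTEGRATED`. The driver API half needs the program to be linked against the driver library (for example, `-lcuda`). The sketch assumes that device ordinals agree between the two APIs, which is the usual case.

```cuda
#include <cuda.h>          // driver API: cuInit, cuDeviceGet, cuDeviceGetAttribute
#include <cuda_runtime.h>  // runtime API: cudaGetDeviceProperties

// Runtime API: 1 if device `ordinal` is integrated, 0 if discrete, -1 on error.
int isIntegratedRuntime(int ordinal)
{
    cudaDeviceProp prop;
    if (cudaGetDeviceProperties(&prop, ordinal) != cudaSuccess)
        return -1;
    return prop.integrated ? 1 : 0;
}

// Driver API: the same query through CU_DEVICE_ATTRIBUTE_INTEGRATED.
int isIntegratedDriver(int ordinal)
{
    CUdevice dev;
    int value = 0;
    if (cuInit(0) != CUDA_SUCCESS ||
        cuDeviceGet(&dev, ordinal) != CUDA_SUCCESS ||
        cuDeviceGetAttribute(&value, CU_DEVICE_ATTRIBUTE_INTEGRATED, dev) != CUDA_SUCCESS)
        return -1;
    return value ? 1 : 0;
}
```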

For CUDA, integrated GPUs are not exactly a rarity; millions of computers have integrated, CUDA-capable GPUs on board, but they are something of a curiosity, and in a few years, they will be an anachronism because NVIDIA has exited the x86 chipset business. That said, NVIDIA has announced its intention to ship systems on a chip (SOCs) that integrate CUDA-capable GPUs with ARM CPUs, and it is a safe bet that zero-copy optimizations will work well on those systems.

Figure 2.9 Integrated GPU with discrete GPU(s).