3.5_Factors_that_influence_optimal_policies

3.5 Factors that influence optimal policies

The BOE is a powerful tool for analyzing optimal policies. We next apply the BOE to study what factors can influence optimal policies. This question can be easily answered by observing the elementwise expression of the BOE:

The optimal state value and optimal policy are determined by the following parameters: 1) the immediate reward , 2) the discount rate , and 3) the system model . While the system model is fixed, we next discuss how the optimal policy varies when we change the values of and . All the optimal policies presented in this section can be obtained via the algorithm in Theorem 3.3. The implementation details of the algorithm will be given in Chapter 4. The present chapter mainly focuses on the fundamental properties of optimal policies.

A baseline example

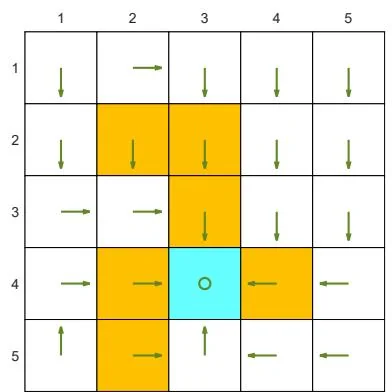

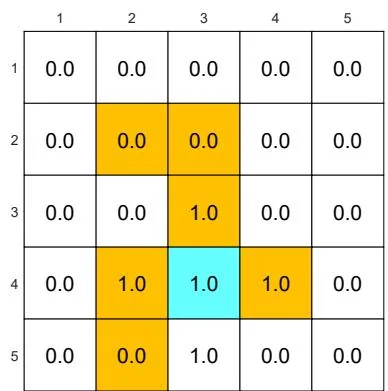

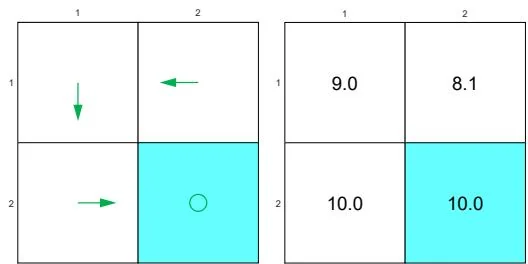

Consider the example in Figure 3.4. The reward settings are and . In addition, the agent receives a reward of for every movement step. The discount rate is selected as .

With the above parameters, the optimal policy and optimal state values are given in Figure 3.4(a). It is interesting that the agent is not afraid of passing through forbidden areas to reach the target area. More specifically, starting from the state at column ), the agent has two options for reaching the target area. The first option is to avoid all the forbidden areas and travel a long distance to the target area. The second option is to pass through forbidden areas. Although the agent obtains negative rewards when entering forbidden areas, the cumulative reward of the second trajectory is greater than that of the first trajectory. Therefore, the optimal policy is far-sighted due to the relatively large value of

Impact of the discount rate

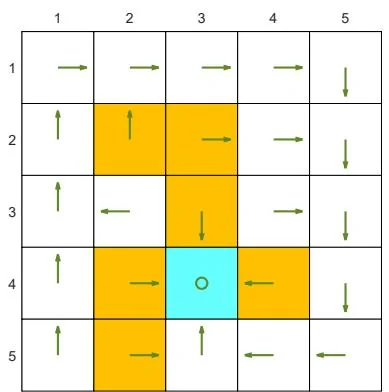

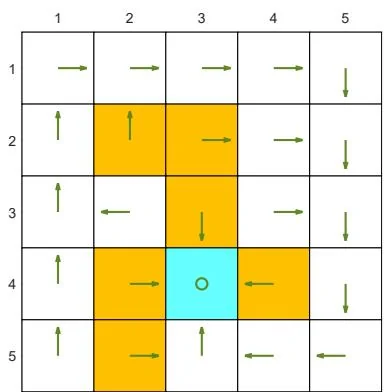

If we change the discount rate from to and keep other parameters unchanged, the optimal policy becomes the one shown in Figure 3.4(b). It is interesting that the agent does not dare to take risks anymore. Instead, it would travel a long distance to reach the target while avoiding all the forbidden areas. This is because the optimal policy becomes short-sighted due to the relatively small value of .

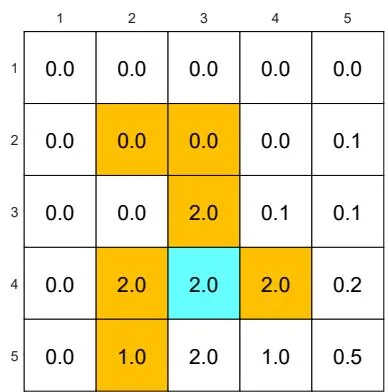

In the extreme case where , the corresponding optimal policy is shown in Figure 3.4(c). In this case, the agent is not able to reach the target area. This is

(a) Baseline example: , ,

(b) The discount rate is changed to . The other parameters are the same as those in (a).

(c) The discount rate is changed to . The other parameters are the same as those in (a).

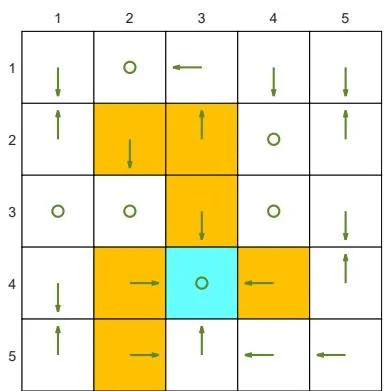

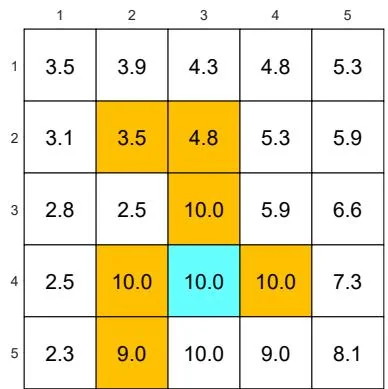

(d) is changed from to . The other parameters are the same as those in (a).

Figure 3.4: The optimal policies and optimal state values given different parameter values.

because the optimal policy for each state is extremely short-sighted and merely selects the action with the greatest immediate reward instead of the greatest total reward.

In addition, the spatial distribution of the state values exhibits an interesting pattern: the states close to the target have greater state values, whereas those far away have lower values. This pattern can be observed from all the examples shown in Figure 3.4. It can be explained by using the discount rate: if a state must travel along a longer trajectory to reach the target, its state value is smaller due to the discount rate.

Impact of the reward values

If we want to strictly prohibit the agent from entering any forbidden area, we can increase the punishment received for doing so. For instance, if is changed from -1 to -10, the resulting optimal policy can avoid all the forbidden areas (see Figure 3.4(d)).

However, changing the rewards does not always lead to different optimal policies. One important fact is that optimal policies are invariant to affine transformations of the rewards. In other words, if we scale all the rewards or add the same value to all the rewards, the optimal policy remains the same.

Theorem 3.6 (Optimal policy invariance). Consider a Markov decision process with as the optimal state value satisfying . If every reward is changed by an affine transformation to , where and , then the corresponding optimal state value is also an affine transformation of :

where is the discount rate and . Consequently, the optimal policy derived from is invariant to the affine transformation of the reward values.

Box 3.5: Proof of Theorem 3.6

For any policy , define where

If , then and hence , where . In this case, the BOE becomes

We next solve the new BOE in (3.9) by showing that with is a solution of (3.9). In particular, substituting into (3.9) gives

where the last equality is due to the fact that . The above equation can be reorganized as

which is equivalent to

Since , the above equation is valid and hence is the solution of (3.9). Since (3.9) is the BOE, is also the unique solution. Finally, since is an affine transformation of , the relative relationships between the action values remain the same. Hence, the greedy optimal policy derived from is the same as that from : is the same as .

Readers may refer to [9] for a further discussion on the conditions under which modifications to the reward values preserve the optimal policy.

Avoiding meaningless detours

In the reward setting, the agent receives a reward of for every movement step (unless it enters a forbidden area or the target area or attempts to go beyond the boundary). Since a zero reward is not a punishment, would the optimal policy take meaningless detours before reaching the target? Should we set to be negative to encourage the agent to reach the target as quickly as possible?

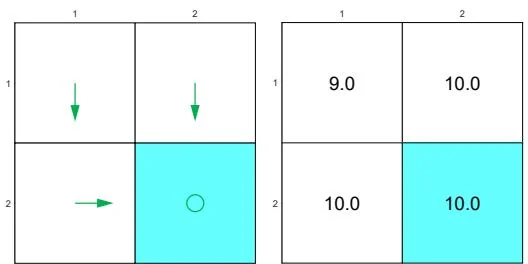

(a) Optimal policy

(b) Non-optimal policy

Figure 3.5: Examples illustrating that optimal policies do not take meaningless detours due to the discount rate.

Consider the examples in Figure 3.5, where the bottom-right cell is the target area

to reach. The two policies here are the same except for state . By the policy in Figure 3.5(a), the agent moves downward at and the resulting trajectory is . By the policy in Figure 3.5(b), the agent moves leftward and the resulting trajectory is .

It is notable that the second policy takes a detour before reaching the target area. If we merely consider the immediate rewards, taking this detour does not matter because no negative immediate rewards will be obtained. However, if we consider the discounted return, then this detour matters. In particular, for the first policy, the discounted return is

As a comparison, the discounted return for the second policy is

It is clear that the shorter the trajectory is, the greater the return is. Therefore, although the immediate reward of every step does not encourage the agent to approach the target as quickly as possible, the discount rate does encourage it to do so.

A misunderstanding that beginners may have is that adding a negative reward (e.g., -1) on top of the rewards obtained for every movement is necessary to encourage the agent to reach the target as quickly as possible. This is a misunderstanding because adding the same reward on top of all rewards is an affine transformation, which preserves the optimal policy. Moreover, optimal policies do not take meaningless detours due to the discount rate, even though a detour may not receive any immediate negative rewards.