9.6 Q&A

Q: What is the basic idea of the policy gradient method?

A: The basic idea is simple: define an appropriate scalar metric, derive its gradient, and then optimize the metric with a gradient-ascent method. The most important theoretical result for this method is the policy gradient given in Theorem 9.1.
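The three steps (metric, gradient, gradient ascent) can be illustrated on a toy problem. The following is a minimal sketch, not the book's algorithm: it assumes a hypothetical single-state problem with three actions, a softmax policy whose parameter `theta` holds one logit per action, and made-up rewards. The names `rewards`, `metric`, and `metric_gradient` are introduced here only for illustration.

```python
import numpy as np

def softmax(logits):
    """Numerically stable softmax over a 1-D array of logits."""
    shifted = logits - np.max(logits)
    exp = np.exp(shifted)
    return exp / exp.sum()

# Hypothetical single-state problem: three actions with fixed rewards.
rewards = np.array([1.0, 0.0, 2.0])

def metric(theta):
    """Step 1: scalar metric J(theta), the expected reward under the policy."""
    return float(softmax(theta) @ rewards)

def metric_gradient(theta):
    """Step 2: exact gradient of J for a softmax policy, pi(a) * (r(a) - J)."""
    pi = softmax(theta)
    return pi * (rewards - pi @ rewards)

# Step 3: gradient ascent on the metric.
theta = np.zeros(3)
j_initial = metric(theta)
for _ in range(200):
    theta = theta + 0.5 * metric_gradient(theta)
j_final = metric(theta)

print(j_initial, j_final, softmax(theta))
```

In this toy setting the gradient can be computed exactly because the rewards are known. In general it cannot, which is why the expectation form of the policy gradient matters.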

Q: What is the most complicated part of the policy gradient method?

A: Although the basic idea of the policy gradient method is simple, deriving the gradients is quite complicated. The reason is that several different scenarios must be distinguished, and the mathematical derivation in each scenario is nontrivial. For many readers, it is sufficient to be familiar with the result in Theorem 9.1 without knowing the proof.

Q: What metrics should be used in the policy gradient method?

A: We introduced three common metrics in this chapter: $\bar{v}_\pi$, $\bar{v}_\pi^0$, and $\bar{r}_\pi$. Since they all lead to similar policy gradients, any of them can be adopted in the policy gradient method. More importantly, the expressions in (9.1) and (9.4) are often encountered in the literature.

Q: Why is a natural logarithm function contained in the policy gradient?

A: A natural logarithm function is introduced to express the gradient as an expected value. In this way, we can approximate the true gradient with a stochastic one.
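The step behind this answer can be written out briefly. The notation below is an assumption for illustration: $\pi(a|s,\theta)$ is the policy, $q_\pi(s,a)$ is the action value, and $\pi(a|s,\theta) > 0$ for all actions so that the logarithm is well defined. Since

$$
\nabla_\theta \ln \pi(a|s,\theta) = \frac{\nabla_\theta \pi(a|s,\theta)}{\pi(a|s,\theta)},
$$

a sum over actions that involves $\nabla_\theta \pi$ becomes an expectation over actions drawn from the policy:

$$
\sum_a \nabla_\theta \pi(a|s,\theta)\, q_\pi(s,a)
= \sum_a \pi(a|s,\theta)\, \nabla_\theta \ln \pi(a|s,\theta)\, q_\pi(s,a)
= \mathbb{E}_{A \sim \pi(\cdot|s,\theta)}\big[\nabla_\theta \ln \pi(A|s,\theta)\, q_\pi(s,A)\big].
$$

The right-hand side can be estimated from sampled actions, which is what makes a stochastic gradient possible.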

Q: Why do we need to study undiscounted cases when deriving the policy gradient?

A: The definition of the average reward $\bar{r}_\pi$ is valid for both discounted and undiscounted cases. While the gradient of $\bar{r}_\pi$ in the discounted case is an approximation, its gradient in the undiscounted case is more elegant.

Q: What does the policy gradient algorithm in (9.32) do mathematically?

A: To better understand this algorithm, readers are encouraged to examine its concise expression in (9.33), which clearly shows that it is a gradient-ascent algorithm for updating the value of $\pi(a_t|s_t,\theta_t)$. That is, when a sample $(s_t,a_t)$ is available, the policy can be updated so that $\pi(a_t|s_t,\theta_{t+1}) \ge \pi(a_t|s_t,\theta_t)$ or $\pi(a_t|s_t,\theta_{t+1}) \le \pi(a_t|s_t,\theta_t)$, depending on the coefficients.
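This behavior can be checked numerically. The sketch below assumes the update has the common sample-based form $\theta \leftarrow \theta + \alpha\, \nabla_\theta \ln \pi(a_t|s_t,\theta)\, q_t$, and it assumes a tabular softmax policy for a single state. The function `policy_gradient_step` and the numbers used are hypothetical and chosen only for illustration.

```python
import numpy as np

def softmax(logits):
    """Numerically stable softmax over a 1-D array of logits."""
    shifted = logits - np.max(logits)
    exp = np.exp(shifted)
    return exp / exp.sum()

def policy_gradient_step(theta, action, q_value, alpha=0.1):
    """One sample-based update of the logits of a single state.

    Assumed form: theta + alpha * grad(ln pi(action)) * q_value.
    For a softmax policy, grad(ln pi(action)) = one_hot(action) - pi.
    """
    pi = softmax(theta)
    grad_log_pi = -pi            # new array, so theta and pi are not modified
    grad_log_pi[action] += 1.0
    return theta + alpha * q_value * grad_log_pi

theta = np.array([0.2, -0.1, 0.4])   # hypothetical logits for one state
a_t = 1                              # the sampled action

p_before = softmax(theta)[a_t]
p_up = softmax(policy_gradient_step(theta, a_t, q_value=2.0))[a_t]
p_down = softmax(policy_gradient_step(theta, a_t, q_value=-2.0))[a_t]

print(p_down, p_before, p_up)
```

With a positive coefficient the probability of the sampled action increases, and with a negative coefficient it decreases. The other actions absorb the difference because the probabilities must sum to one.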

Chapter 10

Actor-Critic Methods

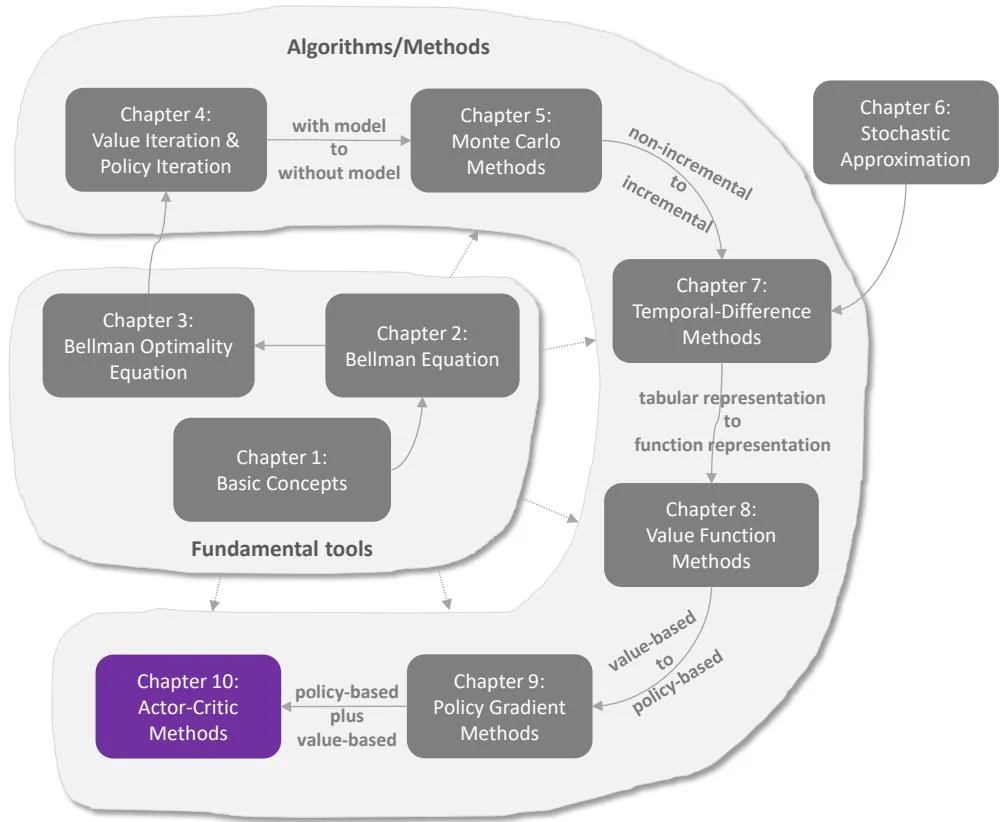

Figure 10.1: Where we are in this book.

This chapter introduces actor-critic methods. From one point of view, "actor-critic" refers to a structure that incorporates both policy-based and value-based methods. Here, an "actor" refers to a policy update step. It is called an actor because the actions are taken by following the policy. A "critic" refers to a value update step. It is called a critic because it criticizes the actor by evaluating the corresponding values. From another point of view, actor-critic methods are still policy gradient algorithms. They can be obtained by extending the policy gradient algorithm introduced in Chapter 9. Readers should understand the contents of Chapters 8 and 9 well before studying this chapter.
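To make the two roles concrete, here is a minimal tabular sketch of one actor-critic step. It is an illustration under stated assumptions rather than the specific algorithms developed in this chapter: the actor is a softmax policy with one logit per state-action pair, the critic is a table of action values updated by a Sarsa-style temporal-difference rule, and the actor uses the critic's estimate from before the critic update. The function `actor_critic_step`, its parameters, and all numbers are hypothetical.

```python
import numpy as np

def softmax(logits):
    """Numerically stable softmax over a 1-D array of logits."""
    shifted = logits - np.max(logits)
    exp = np.exp(shifted)
    return exp / exp.sum()

def actor_critic_step(theta, q, s, a, r, s_next, a_next,
                      alpha_actor=0.1, alpha_critic=0.1, gamma=0.9):
    """Apply one actor update and one critic update; return new copies."""
    theta, q = theta.copy(), q.copy()

    # Actor (policy update): move the logits of state s along
    # grad(ln pi(a|s)), weighted by the critic's current estimate q[s, a].
    pi = softmax(theta[s])
    grad_log_pi = -pi
    grad_log_pi[a] += 1.0
    theta[s] += alpha_actor * q[s, a] * grad_log_pi

    # Critic (value update): Sarsa-style temporal-difference correction.
    td_error = r + gamma * q[s_next, a_next] - q[s, a]
    q[s, a] += alpha_critic * td_error
    return theta, q

# Hypothetical problem with two states and two actions.
theta0 = np.zeros((2, 2))   # policy logits
q0 = np.ones((2, 2))        # action-value estimates
theta1, q1 = actor_critic_step(theta0, q0, s=0, a=1, r=1.0, s_next=1, a_next=0)

print(theta1)
print(q1)
```

Only the entries for the visited state-action pair change in one step. Whether the actor reads the value estimate before or after the critic update is a design choice, and this sketch picks the former.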