
Chapter 15: Inter-Agent Communication (A2A)

Individual AI agents often face limitations when tackling complex, multifaceted problems, even with advanced capabilities. To overcome this, Inter-Agent Communication (A2A) enables diverse AI agents, potentially built with different frameworks, to collaborate effectively. This collaboration involves seamless coordination, task delegation, and information exchange.

Google's A2A protocol is an open standard designed to facilitate this universal communication. This chapter will explore A2A, its practical applications, and its implementation within the Google ADK.

Inter-Agent Communication Pattern Overview

The Agent2Agent (A2A) protocol is an open standard designed to enable communication and collaboration between different AI agent frameworks. It ensures interoperability, allowing AI agents developed with technologies like LangGraph, CrewAI, or Google ADK to work together regardless of their origin or framework differences.

A2A is supported by a range of technology companies and service providers, including Atlassian, Box, LangChain, MongoDB, Salesforce, SAP, and ServiceNow. Microsoft plans to integrate A2A into Azure AI Foundry and Copilot Studio, demonstrating its commitment to open protocols. Additionally, Auth0 and SAP are integrating A2A support into their platforms and agents.

As an open-source protocol, A2A welcomes community contributions to facilitate its evolution and widespread adoption.

Core Concepts of A2A

The A2A protocol provides a structured approach for agent interactions, built upon several core concepts. A thorough grasp of these concepts is crucial for anyone developing or integrating with A2A-compliant systems. The foundational pillars of A2A include Core Actors, Agent Card, Agent Discovery, Communication and Tasks, Interaction mechanisms, and Security, all of which will be reviewed in detail.

Core Actors: A2A involves three main entities:

User: Initiates requests for agent assistance.

A2A Client (Client Agent): An application or AI agent that acts on the user's behalf to request actions or information.

A2A Server (Remote Agent): An AI agent or system that provides an HTTP endpoint to process client requests and return results. The remote agent operates as an "opaque" system, meaning the client does not need to understand its internal operational details.

Agent Card: An agent's digital identity is defined by its Agent Card, usually a JSON file. This file contains key information for client interaction and automatic discovery, including the agent's identity, endpoint URL, and version. It also details supported capabilities like streaming or push notifications, specific skills, default input/output modes, and authentication requirements. Below is an example of an Agent Card for a WeatherBot.

{
  "name": "WeatherBot",
  "description": "Provides accurate weather forecasts and historical data.",
  "url": "http://weather-service.example.com/a2a",
  "version": "1.0.0",
  "capabilities": {
    "streaming": true,
    "pushNotifications": false,
    "stateTransitionHistory": true
  },
  "authentication": {
    "schemes": ["apiKey"]
  },
  "defaultInputModes": ["text"],
  "defaultOutputModes": ["text"],
  "skills": [
    {
      "id": "get_current_weather",
      "name": "Get Current Weather",
      "description": "Retrieve real-time weather for any location.",
      "inputModes": ["text"],
      "outputModes": ["text"],
      "examples": ["What's the weather in Paris?", "Current conditions in Tokyo"],
      "tags": ["weather", "current", "real-time"]
    },
    {
      "id": "get_forecast",
      "name": "Get Forecast",
      "description": "Get 5-day weather predictions.",
      "inputModes": ["text"],
      "outputModes": ["text"],
      "examples": ["5-day forecast for New York", "Will it rain in London this weekend?"],
      "tags": ["weather", "forecast", "prediction"]
    }
  ]
}

Agent Discovery: Discovery allows clients to find Agent Cards, which describe the capabilities of available A2A Servers. Several strategies exist for this process:

Well-Known URI: Agents host their Agent Card at a standardized path (e.g., /.well-known/agent.json). This approach offers broad, often automated, accessibility for public or domain-specific use.

Curated Registries: These provide a centralized catalog where Agent Cards are published and can be queried based on specific criteria. This is well-suited for enterprise environments needing centralized management and access control.

Direct Configuration: Agent Card information is embedded or privately shared. This method is appropriate for closely coupled or private systems where dynamic discovery isn't crucial.

Regardless of the chosen method, it is important to secure Agent Card endpoints. This can be achieved through access control, mutual TLS (mTLS), or network restrictions, especially if the card contains sensitive (though non-secret) information.
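As a minimal sketch of the well-known URI strategy, the snippet below derives the card location from an agent's base URL and checks a parsed card for the fields a client would need. The helper names (well_known_card_url, parse_agent_card, skill_ids) and the list of required fields are illustrative assumptions, not part of any A2A SDK. The card is parsed from a local string, so no network access is involved.

```python
import json
from urllib.parse import urljoin

# Standardized location of the Agent Card on the agent's host.
WELL_KNOWN_PATH = "/.well-known/agent.json"

# Fields this sketch assumes a client needs before calling the agent.
REQUIRED_FIELDS = ("name", "url", "version", "capabilities", "skills")


def well_known_card_url(base_url: str) -> str:
    """Return the well-known Agent Card URL for an agent's base URL."""
    return urljoin(base_url, WELL_KNOWN_PATH)


def parse_agent_card(raw: str) -> dict:
    """Parse an Agent Card and fail early if a required field is missing."""
    card = json.loads(raw)
    missing = [name for name in REQUIRED_FIELDS if name not in card]
    if missing:
        raise ValueError(f"Agent Card is missing fields: {missing}")
    return card


def skill_ids(card: dict) -> list:
    """List the skill identifiers a card advertises."""
    return [skill["id"] for skill in card["skills"]]


# A trimmed-down copy of the WeatherBot card, used as local test data.
sample_card = json.dumps({
    "name": "WeatherBot",
    "url": "http://weather-service.example.com/a2a",
    "version": "1.0.0",
    "capabilities": {"streaming": True, "pushNotifications": False},
    "skills": [{"id": "get_forecast", "name": "Get Forecast"}],
})

card = parse_agent_card(sample_card)
print(well_known_card_url(card["url"]))
print(skill_ids(card))
```

In a real client the card text would come from an HTTP GET against the derived URL, subject to whatever access control protects that endpoint.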

Communications and Tasks: In the A2A framework, communication is structured around asynchronous tasks, which represent the fundamental units of work for long-running processes. Each task is assigned a unique identifier and moves through a series of states—such as submitted, working, or completed—a design that supports parallel processing in complex operations. Communication between agents occurs through a Message.

This communication contains attributes, which are key-value metadata describing the message (like its priority or creation time), and one or more parts, which carry the actual content being delivered, such as plain text, files, or structured JSON data. The tangible outputs generated by an agent during a task are called artifacts. Like messages, artifacts are also composed of one or more parts and can be streamed incrementally as results become available. All communication within the A2A framework is conducted over HTTP(S) using the JSON-RPC 2.0 protocol for payloads. To maintain continuity across multiple interactions, a server-generated contextId is used to group related tasks and preserve context.
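The relationship between tasks, messages, parts, and artifacts can be pictured with a few small data classes. This is a hedged sketch for illustration only: the class names, the field names, and the reduced set of allowed state transitions are assumptions made here, and the protocol itself defines more states than the four shown.

```python
import uuid
from dataclasses import dataclass, field
from enum import Enum


class TaskState(str, Enum):
    SUBMITTED = "submitted"
    WORKING = "working"
    COMPLETED = "completed"
    FAILED = "failed"


# Transitions allowed in this sketch (a simplification of the real lifecycle).
ALLOWED = {
    TaskState.SUBMITTED: {TaskState.WORKING, TaskState.FAILED},
    TaskState.WORKING: {TaskState.COMPLETED, TaskState.FAILED},
    TaskState.COMPLETED: set(),
    TaskState.FAILED: set(),
}


@dataclass
class Part:
    type: str        # e.g. "text", "file", or "data"
    content: object  # the payload carried by this part


@dataclass
class Message:
    role: str
    parts: list
    attributes: dict = field(default_factory=dict)  # key-value metadata


@dataclass
class Task:
    context_id: str  # groups related tasks
    id: str = field(default_factory=lambda: str(uuid.uuid4()))
    state: TaskState = TaskState.SUBMITTED
    artifacts: list = field(default_factory=list)  # each artifact is a list of Parts

    def advance(self, new_state: TaskState) -> None:
        """Move to new_state, rejecting transitions the sketch does not allow."""
        if new_state not in ALLOWED[self.state]:
            raise ValueError(f"Cannot move from {self.state.value} to {new_state.value}")
        self.state = new_state


task = Task(context_id="ctx-1")
request = Message(role="user", parts=[Part("text", "Summarize Q3 sales")])
task.advance(TaskState.WORKING)
task.artifacts.append([Part("text", "Q3 sales summary")])
task.advance(TaskState.COMPLETED)
print(task.state.value)
```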

Interaction Mechanisms: A2A provides multiple interaction methods to suit a variety of AI application needs, each with a distinct mechanism:

Synchronous Request/Response: For quick, immediate operations. In this model, the client sends a request and actively waits for the server to process it and return a complete response in a single, synchronous exchange.

Asynchronous Polling: Suited for tasks that take longer to process. The client sends a request, and the server immediately acknowledges it with a "working" status and a task ID. The client is then free to perform other actions and can periodically poll the server by sending new requests to check the status of the task until it is marked as "completed" or "failed."

Streaming Updates (Server-Sent Events - SSE): Ideal for receiving real-time, incremental results. This method establishes a persistent, one-way connection from the server to the client. It allows the remote agent to continuously push updates, such as status changes or partial results, without the client needing to make multiple requests.

Push Notifications (Webhooks): Designed for very long-running or resource-intensive tasks where maintaining a constant connection or frequent polling is inefficient. The client can register a webhook URL, and the server will send an asynchronous notification (a "push") to that URL when the task's status changes significantly (e.g., upon completion).

The Agent Card specifies whether an agent supports streaming or push notification capabilities. Furthermore, A2A is modality-agnostic, meaning it can facilitate these interaction patterns not just for text, but also for other data types like audio and video, enabling rich, multimodal AI applications.
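The asynchronous polling pattern described above can be sketched as a small loop. The function poll_until_done and the FakeServer stand-in are hypothetical names introduced for illustration; a real client would replace the status callback with an HTTP request to the remote agent.

```python
import time
from typing import Callable

TERMINAL_STATES = ("completed", "failed")


def poll_until_done(
    get_status: Callable[[str], str],
    task_id: str,
    interval_s: float = 0.5,
    max_attempts: int = 20,
) -> str:
    """Call get_status(task_id) until it reports a terminal state."""
    for _ in range(max_attempts):
        status = get_status(task_id)
        if status in TERMINAL_STATES:
            return status
        time.sleep(interval_s)  # the client is free to do other work here
    raise TimeoutError(f"Task {task_id} still running after {max_attempts} polls")


class FakeServer:
    """Stand-in for a remote agent: reports 'working' twice, then 'completed'."""

    def __init__(self) -> None:
        self.calls = 0

    def status(self, task_id: str) -> str:
        self.calls += 1
        return "working" if self.calls < 3 else "completed"


server = FakeServer()
final = poll_until_done(server.status, "task-001", interval_s=0.01)
print(final, server.calls)
```

The attempt cap is a design choice in this sketch: it keeps a client from polling forever when a task never reaches a terminal state.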

Synchronous Request Example

{
  "jsonrpc": "2.0",
  "id": "1",
  "method": "sendTask",
  "params": {
    "id": "task-001",
    "sessionId": "session-001",
    "message": {
      "role": "user",
      "parts": [
        {
          "type": "text",
          "text": "What is the exchange rate from USD to EUR?"
        }
      ]
    },
    "acceptedOutputModes": ["text/plain"],
    "historyLength": 5
  }
}

The synchronous request uses the sendTask method, where the client asks for and expects a single, complete answer to its query. In contrast, the streaming request uses the sendTaskSubscribe method to establish a persistent connection, allowing the agent to send back multiple, incremental updates or partial results over time.
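Because the two request shapes differ only in the method name, a small builder can produce either one. The sketch below is illustrative: build_task_request is a hypothetical helper, and the method and field names simply mirror the examples in this chapter, so they should be checked against the protocol version you target.

```python
import json


def build_task_request(request_id, task_id, session_id, text,
                       streaming=False, history_length=5):
    """Build a JSON-RPC 2.0 request dict for a text-only task."""
    # Method names follow this chapter's examples.
    method = "sendTaskSubscribe" if streaming else "sendTask"
    return {
        "jsonrpc": "2.0",
        "id": request_id,
        "method": method,
        "params": {
            "id": task_id,
            "sessionId": session_id,
            "message": {
                "role": "user",
                "parts": [{"type": "text", "text": text}],
            },
            "acceptedOutputModes": ["text/plain"],
            "historyLength": history_length,
        },
    }


sync_request = build_task_request(
    "1", "task-001", "session-001",
    "What is the exchange rate from USD to EUR?",
)
stream_request = build_task_request(
    "2", "task-002", "session-001",
    "What's the exchange rate for JPY to GBP today?",
    streaming=True,
)
print(json.dumps(sync_request, indent=2))
```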

Streaming Request Example

{
  "jsonrpc": "2.0",
  "id": "2",
  "method": "sendTaskSubscribe",
  "params": {
    "id": "task-002",
    "sessionId": "session-001",
    "message": {
      "role": "user",
      "parts": [
        {
          "type": "text",
          "text": "What's the exchange rate for JPY to GBP today?"
        }
      ]
    },
    "acceptedOutputModes": ["text/plain"],
    "historyLength": 5
  }
}

Security: Inter-Agent Communication (A2A) is a vital component of system architecture, enabling secure and seamless data exchange among agents. It ensures robustness and integrity through several built-in mechanisms.

Mutual Transport Layer Security (TLS): Encrypted and authenticated connections are established to prevent unauthorized access and data interception, ensuring secure communication.

Comprehensive Audit Logs: All inter-agent communications are meticulously recorded, detailing information flow, involved agents, and actions. This audit trail is crucial for accountability, troubleshooting, and security analysis.

Agent Card Declaration: Authentication requirements are explicitly declared in the Agent Card, a configuration artifact outlining the agent's identity, capabilities, and security policies. This centralizes and simplifies authentication management.

Credential Handling: Agents typically authenticate using secure credentials like OAuth 2.0 tokens or API keys, passed via HTTP headers. This method prevents credential exposure in URLs or message bodies, enhancing overall security.
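The credential-handling point can be illustrated with a short sketch that places the credential in an HTTP header and keeps it out of the URL and the message body. The function name, the scheme labels, and in particular the X-API-Key header name are assumptions made for this example; a real agent declares its scheme in the Agent Card. The request object is only constructed here, never sent.

```python
import json
import urllib.request


def build_authenticated_request(url, payload, scheme, credential):
    """Create a POST request that carries the credential in a header only."""
    headers = {"Content-Type": "application/json"}
    if scheme == "bearer":
        headers["Authorization"] = f"Bearer {credential}"
    elif scheme == "apiKey":
        headers["X-API-Key"] = credential  # header name is an assumption
    else:
        raise ValueError(f"Unsupported auth scheme: {scheme}")
    body = json.dumps(payload).encode("utf-8")
    return urllib.request.Request(url, data=body, headers=headers, method="POST")


payload = {"jsonrpc": "2.0", "id": "1", "method": "sendTask", "params": {}}
request = build_authenticated_request(
    "https://weather-service.example.com/a2a", payload, "bearer", "example-token"
)
print(request.get_method(), request.full_url)
```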

A2A vs. MCP

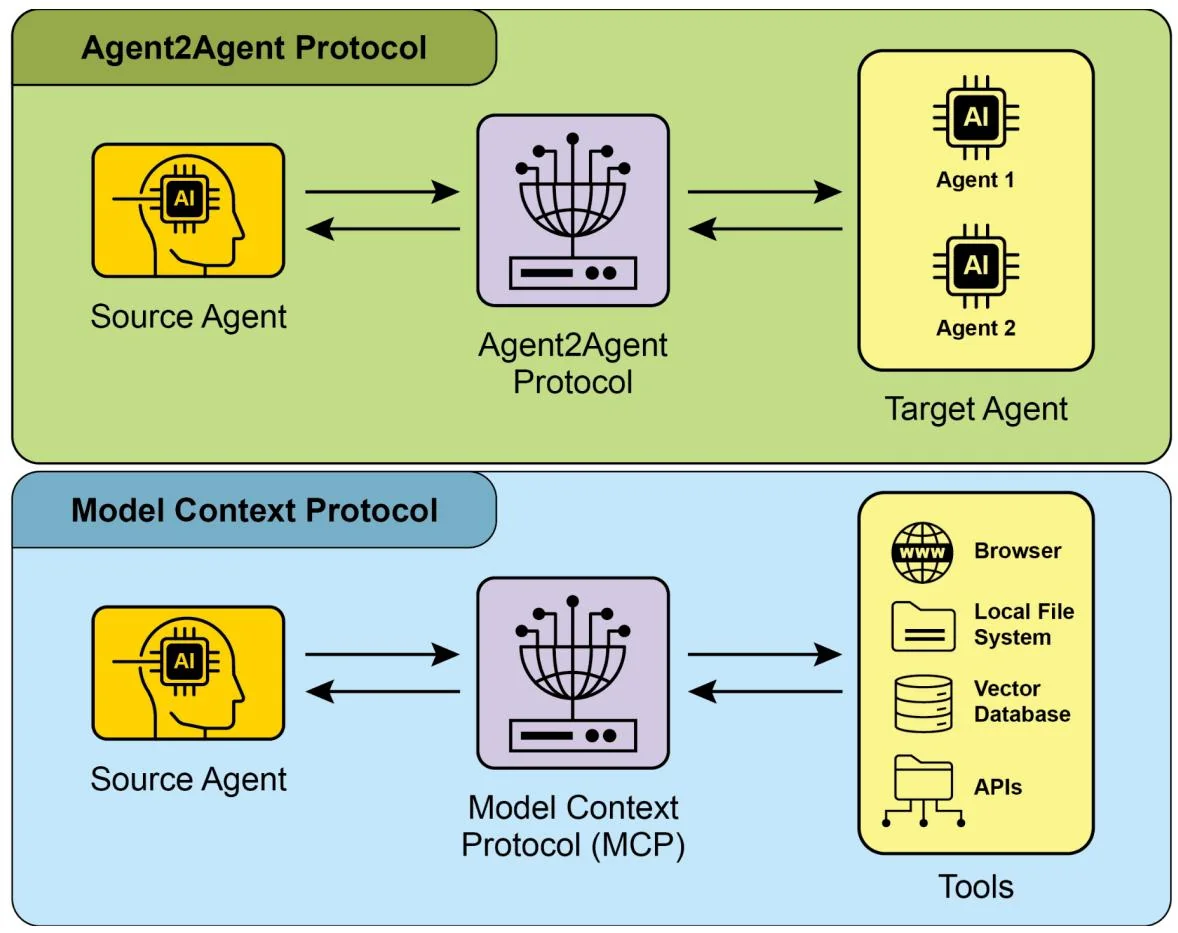

A2A is a protocol that complements Anthropic's Model Context Protocol (MCP) (see Fig. 1). While MCP focuses on structuring context for agents and their interaction with external data and tools, A2A facilitates coordination and communication among agents, enabling task delegation and collaboration.

Fig.1: Comparison of A2A and MCP Protocols

The goal of A2A is to enhance efficiency, reduce integration costs, and foster innovation and interoperability in the development of complex, multi-agent AI systems. Therefore, a thorough understanding of A2A's core components and operational methods is essential for its effective design, implementation, and application in building collaborative and interoperable AI agent systems.

Practical Applications & Use Cases

Inter-Agent Communication is indispensable for building sophisticated AI solutions across diverse domains, enabling modularity, scalability, and enhanced intelligence.

Multi-Framework Collaboration: A2A's primary use case is enabling independent AI agents, regardless of their underlying frameworks (e.g., ADK, LangChain, CrewAI), to communicate and collaborate. This is fundamental for building complex multi-agent systems where different agents specialize in different aspects of a problem.

Automated Workflow Orchestration: In enterprise settings, A2A can facilitate complex workflows by enabling agents to delegate and coordinate tasks. For instance, an agent might handle initial data collection, then delegate to another agent for analysis, and finally to a third for report generation, all communicating via the A2A protocol.

Dynamic Information Retrieval: Agents can communicate to retrieve and exchange real-time information. A primary agent might request live market data from a specialized "data fetching agent," which then uses external APIs to gather the information and send it back.

Hands-On Code Example

Let's examine the practical applications of the A2A protocol. The repository at https://github.com/google-a2a/a2a-samples/tree/main/samples provides examples in Java, Go, and Python that illustrate how various agent frameworks, such as LangGraph, CrewAI, Azure AI Foundry, and AG2, can communicate using A2A. All code in this repository is released under the Apache 2.0 license. To further illustrate A2A's core concepts, we will review code excerpts focusing on setting up an A2A Server using an ADK-based agent with Google-authenticated tools. See https://github.com/google-a2a/a2a-samples/blob/main/samples/python/agents/birthday_planner_adk/calendar_agent/adk_agent.py for the agent definition.

To illustrate how an agent is constructed, let's examine a key section from the calendar_agent found in the A2A samples on GitHub. The code below shows how the agent is defined with its specific instructions and tools. Please note that only the code required to explain this functionality is shown; the complete file is available at the URL given above.

import datetime

from google.adk.agents import LlmAgent  # type: ignore[import-untyped]
from google.adk.tools.google_api_tool import CalendarToolset  # type: ignore[import-untyped]


async def create_agent(client_id, client_secret) -> LlmAgent:
    """Constructs the ADK agent."""
    toolset = CalendarToolset(client_id=client_id, client_secret=client_secret)
    return LlmAgent(
        model='gemini-2.0-flash-001',
        name='calendar_agent',
        description="An agent that can help manage a user's calendar",
        instruction=f"""
You are an agent that can help manage a user's calendar.

Users will request information about the state of their calendar or to make changes to their calendar. Use the provided tools for interacting with the calendar API.

If not specified, assume the calendar the user wants is the 'primary' calendar.

When using the Calendar API tools, use well-formed RFC3339 timestamps.

Today is {datetime.datetime.now()}.
""",
        tools=await toolset.get_tools(),
    )

This Python code defines an asynchronous function create_agent that constructs an ADK LlmAgent. It begins by initializing a CalendarToolset using the provided client credentials to access the Google Calendar API. Subsequently, an LlmAgent instance is created, configured with a specified Gemini model, a descriptive name, and instructions for managing a user's calendar. The agent is furnished with calendar tools from the CalendarToolset, enabling it to interact with the Calendar API and respond to user queries regarding calendar states or modifications. The agent's instructions dynamically incorporate the current date for temporal context.

The next excerpt, from the same sample, shows how this agent is exposed as an A2A server.

# Excerpt: imports and the command-line wrapper that supplies host and port are omitted.
def main(host: str, port: int):
    # Verify an API key is set.
    # Not required if using Vertex AI APIs.
    if os.getenv('GOOGLE_GENAI_USE_VERTEXAI') != 'TRUE' and not os.getenv(
        'GOOGLE_API_KEY'
    ):
        raise ValueError(
            'GOOGLE_API_KEY environment variable not set and '
            'GOOGLE_GENAI_USE_VERTEXAI is not TRUE.'
        )

    # Describe the single skill this agent advertises.
    skill = AgentSkill(
        id='check_availability',
        name='Check Availability',
        description="Checks a user's availability for a time using their Google Calendar",
        tags=['calendar'],
        examples=['Am I free from 10am to 11am tomorrow?'],
    )

    # The Agent Card publishes identity, endpoint, capabilities, and skills.
    agent_card = AgentCard(
        name='Calendar Agent',
        description="An agent that can manage a user's calendar",
        url=f'http://{host}:{port}/',
        version='1.0.0',
        defaultInputModes=['text'],
        defaultOutputModes=['text'],
        capabilities=AgentCapabilities(streaming=True),
        skills=[skill],
    )

    adk_agent = asyncio.run(
        create_agent(
            client_id=os.getenv('GOOGLE_CLIENT_ID'),
            client_secret=os.getenv('GOOGLE_CLIENT_SECRET'),
        )
    )
    runner = Runner(
        app_name=agent_card.name,
        agent=adk_agent,
        artifact_service=InMemoryArtifactService(),
        session_service=InMemorySessionService(),
        memory_service=InMemoryMemoryService(),
    )
    agent_executor = ADKAgentExecutor(runner, agent_card)

    # Callback endpoint that completes the authentication flow.
    async def handle_auth(request: Request) -> PlainTextResponse:
        await agent_executor.on_auth_callback(
            str(request.query_params.get('state')), str(request.url)
        )
        return PlainTextResponse('Authentication successful.')

    request_handler = DefaultRequestHandler(
        agent_executor=agent_executor, task_store=InMemoryTaskStore()
    )

    a2a_app = A2AStarletteApplication(
        agent_card=agent_card, http_handler=request_handler
    )
    routes = a2a_app.routes()
    routes.append(
        Route(
            path='/authenticate',
            methods=['GET'],
            endpoint=handle_auth,
        )
    )
    app = Starlette(routes=routes)

    uvicorn.run(app, host=host, port=port)


if __name__ == '__main__':
    main()

This Python code demonstrates setting up an A2A-compliant "Calendar Agent" for checking user availability using Google Calendar. It involves verifying API keys or Vertex AI configurations for authentication purposes. The agent's capabilities, including the "check_availability" skill, are defined within an AgentCard, which also specifies the agent's network address. Subsequently, an ADK agent is created, configured with in-memory services for managing artifacts, sessions, and memory. The code then initializes a Starlette web application, incorporates an authentication callback and the A2A protocol handler, and executes it using Uvicorn to expose the agent via HTTP.

These examples illustrate the process of building an A2A-compliant agent, from defining its capabilities to running it as a web service. By utilizing Agent Cards and ADK, developers can create interoperable AI agents capable of integrating with tools like Google Calendar. This practical approach demonstrates the application of A2A in establishing a multi-agent ecosystem.

Further exploration of A2A is recommended through the code demonstration at https://www.trickle.so/blog/how-to-build-google-a2a-project. Resources available at this link include sample A2A clients and servers in Python and JavaScript, multi-agent web applications, command-line interfaces, and example implementations for various agent frameworks.

At a Glance

What: Individual AI agents, especially those built on different frameworks, often struggle with complex, multi-faceted problems on their own. The primary challenge is the lack of a common language or protocol that allows them to communicate and collaborate effectively. This isolation prevents the creation of sophisticated systems where multiple specialized agents can combine their unique skills to solve larger tasks. Without a standardized approach, integrating these disparate agents is costly, time-consuming, and hinders the development of more powerful, cohesive AI solutions.

Why: The Inter-Agent Communication (A2A) protocol provides an open, standardized solution for this problem. It is an HTTP-based protocol that enables interoperability, allowing distinct AI agents to coordinate, delegate tasks, and share information seamlessly, regardless of their underlying technology. A core component is the Agent Card, a digital identity file that describes an agent's capabilities, skills, and communication endpoints, facilitating discovery and interaction. A2A defines various interaction mechanisms, including synchronous and asynchronous communication, to support diverse use cases. By creating a universal standard for agent collaboration, A2A fosters a modular and scalable ecosystem for building complex, multi-agent agentic systems.

Rule of thumb: Use this pattern when you need to orchestrate collaboration between two or more AI agents, especially if they are built using different frameworks (e.g., Google ADK, LangGraph, CrewAI). It is ideal for building complex, modular applications where specialized agents handle specific parts of a workflow, such as delegating data analysis to one agent and report generation to another. This pattern is also essential when an agent needs to dynamically discover and consume the capabilities of other agents to complete a task.

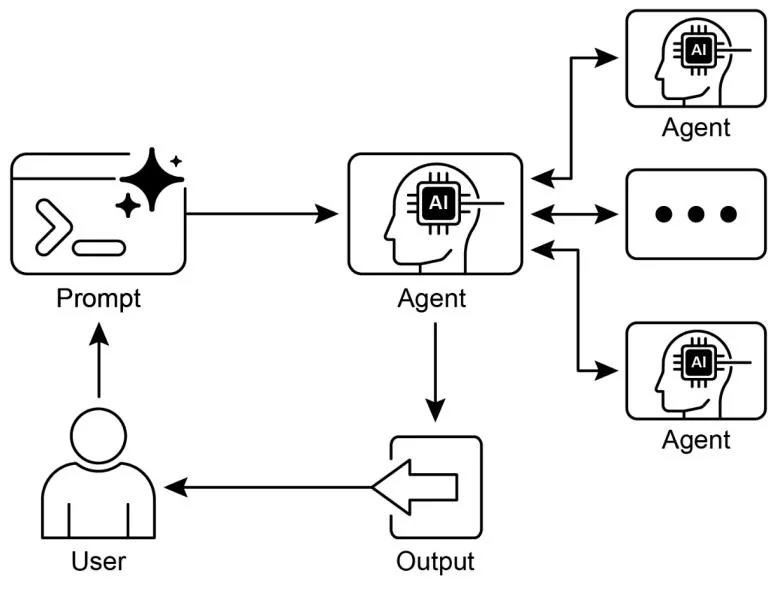

Fig.2: A2A inter-agent communication pattern

Key Takeaways


The Google A2A protocol is an open, HTTP-based standard that facilitates communication and collaboration between AI agents built with different frameworks.

An AgentCard serves as a digital identifier for an agent, allowing for automatic discovery and understanding of its capabilities by other agents.

A2A offers both synchronous request-response interactions (using tasks/send) and streaming updates (using tasks/sendSubscribe) to accommodate varying communication needs.

The protocol supports multi-turn conversations, including an input-required state, which allows agents to request additional information and maintain context during interactions.

A2A encourages a modular architecture where specialized agents can operate independently on different ports, enabling system scalability and distribution.

Tools such as Trickle AI aid in visualizing and tracking A2A communications, which helps developers monitor, debug, and optimize multi-agent systems.

While A2A is a high-level protocol for managing tasks and workflows between different agents, the Model Context Protocol (MCP) provides a standardized interface for LLMs to interact with external resources.

Conclusions

The Inter-Agent Communication (A2A) protocol establishes a vital, open standard to overcome the inherent isolation of individual AI agents. By providing a common HTTP-based framework, it ensures seamless collaboration and interoperability between agents built on different platforms, such as Google ADK, LangGraph, or CrewAI. A core component is the Agent Card, which serves as a digital identity, clearly defining an agent's capabilities and enabling dynamic discovery by other agents. The protocol's flexibility supports various interaction patterns, including synchronous requests, asynchronous polling, and real-time streaming, catering to a wide range of application needs.

This enables the creation of modular and scalable architectures where specialized agents can be combined to orchestrate complex automated workflows. Security is a fundamental aspect, with built-in mechanisms like mTLS and explicit authentication requirements to protect communications. While complementing other standards like MCP, A2A's unique focus is on the high-level coordination and task delegation between agents. The strong backing from major technology companies and the availability of practical implementations highlight its growing importance. This protocol paves the way for developers to build more sophisticated, distributed, and intelligent multi-agent systems. Ultimately, A2A is a foundational pillar for fostering an innovative and interoperable ecosystem of collaborative AI.

References

Chen, B. (2025, April 22). How to Build Your First Google A2A Project: A Step-by-Step Tutorial. Trickle.so Blog. https://www.trickle.so/blog/how-to-build-google-a2a-project

Google A2A GitHub Repository. https://github.com/google-a2a/A2A

Google Agent Development Kit (ADK) https://google.github.io/adk-docs/

Getting Started with Agent-to-Agent (A2A) Protocol: https://codelabs.developers.google.com/intro-a2a-purchasing-concierge#0

Google AgentDiscovery - https://a2a-protocol.org/latest/

Communication between different AI frameworks such as LangGraph, CrewAI, and Google ADK https://www.trickle.so/blog/how-to-build-google-a2a-project

Designing Collaborative Multi-Agent Systems with the A2A Protocol https://www.oreilly.com/radar/designing-collaborative-multi-agent-systems-with-the-a2a-protocol/

Chapter 16: Resource-Aware Optimization

Resource-Aware Optimization enables intelligent agents to dynamically monitor and manage computational, temporal, and financial resources during operation. This differs from simple planning, which primarily focuses on action sequencing. Resource-Aware Optimization requires agents to make decisions regarding action execution to achieve goals within specified resource budgets or to optimize efficiency. This involves choosing between more accurate but expensive models and faster, lower-cost ones, or deciding whether to allocate additional compute for a more refined response versus returning a quicker, less detailed answer.

For example, consider an agent tasked with analyzing a large dataset for a financial analyst. If the analyst needs a preliminary report immediately, the agent might use a faster, more affordable model to quickly summarize key trends. However, if the analyst requires a highly accurate forecast for a critical investment decision and has a larger budget and more time, the agent would allocate more resources to utilize a powerful, slower, but more precise predictive model. A key strategy in this category is the fallback mechanism, which acts as a safeguard when a preferred model is unavailable due to being overloaded or throttled. To ensure graceful degradation, the system automatically switches to a default or more affordable model, maintaining service continuity instead of failing completely.
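The fallback mechanism described above can be sketched in a few lines. Everything named here is hypothetical: ModelUnavailable, call_with_fallback, and the two stub model functions stand in for real client calls, which would raise their own library-specific errors when a model is overloaded or throttled.

```python
from typing import Callable, Sequence, Tuple


class ModelUnavailable(Exception):
    """Raised by a model call when the backend is overloaded or throttled."""


def call_with_fallback(
    prompt: str,
    models: Sequence[Tuple[str, Callable[[str], str]]],
) -> Tuple[str, str]:
    """Try each (name, call) pair in order; return (name, answer) from the first success."""
    last_error = None
    for name, call in models:
        try:
            return name, call(prompt)
        except ModelUnavailable as exc:
            last_error = exc  # remember the failure and try the next model
    raise RuntimeError("All models are unavailable") from last_error


def overloaded_primary(prompt: str) -> str:
    # Stub: simulates a throttled preferred model.
    raise ModelUnavailable("primary model is throttled")


def cheap_backup(prompt: str) -> str:
    # Stub: simulates a cheaper model that is still reachable.
    return f"[backup] summary of: {prompt}"


used, answer = call_with_fallback(
    "Q3 revenue trends",
    [("primary", overloaded_primary), ("backup", cheap_backup)],
)
print(used, answer)
```

Ordering the list from preferred to cheapest gives graceful degradation: the caller still gets an answer, and the returned model name records which tier produced it.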

Practical Applications & Use Cases

Practical use cases include:

Cost-Optimized LLM Usage: An agent deciding whether to use a large, expensive LLM for complex tasks or a smaller, more affordable one for simpler queries, based on a budget constraint.

Latency-Sensitive Operations: In real-time systems, an agent chooses a faster but potentially less comprehensive reasoning path to ensure a timely response.

Energy Efficiency: For agents deployed on edge devices or with limited power, optimizing their processing to conserve battery life.

Fallback for service reliability: An agent automatically switches to a backup model when the primary choice is unavailable, ensuring service continuity and graceful degradation.

Data Usage Management: An agent opting for summarized data retrieval instead of full dataset downloads to save bandwidth or storage.

Adaptive Task Allocation: In multi-agent systems, agents self-assign tasks based on their current computational load or available time.

Hands-On Code Example

An intelligent system for answering user questions can assess the difficulty of each question. For simple queries, it utilizes a cost-effective language model such as Gemini Flash. For complex inquiries, a more powerful, but expensive, language model (like Gemini Pro) is considered. The decision to use the more powerful model also depends on resource availability, specifically budget and time constraints. This system dynamically selects appropriate models.
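A minimal sketch of that decision follows, assuming the query has already been judged simple or complex. ModelProfile, select_model, and every cost and latency figure are illustrative placeholders chosen for this example; they are not real prices or measurements for any Gemini model.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ModelProfile:
    name: str
    cost_per_call: float      # placeholder cost units, not a real price
    typical_latency_s: float  # placeholder latency, not a measurement


# Hypothetical profiles for a cheaper, faster tier and a stronger, slower tier.
FLASH = ModelProfile("flash", cost_per_call=0.001, typical_latency_s=1.0)
PRO = ModelProfile("pro", cost_per_call=0.02, typical_latency_s=8.0)


def select_model(is_complex: bool, budget_left: float, time_left_s: float) -> ModelProfile:
    """Use the stronger model only when the query needs it and resources allow."""
    can_afford_pro = (
        budget_left >= PRO.cost_per_call
        and time_left_s >= PRO.typical_latency_s
    )
    if is_complex and can_afford_pro:
        return PRO
    return FLASH


print(select_model(is_complex=True, budget_left=1.0, time_left_s=30.0).name)
print(select_model(is_complex=True, budget_left=0.005, time_left_s=30.0).name)
print(select_model(is_complex=False, budget_left=1.0, time_left_s=30.0).name)
```

The second call shows the resource check overriding the complexity signal: a complex query still goes to the cheaper tier when the remaining budget cannot cover the stronger one.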

For example, consider a travel planner built with a hierarchical agent. The high-level planning, which involves understanding a user's complex request, breaking it down into a multi-step itinerary, and making logical decisions, would be managed by a sophisticated and more powerful LLM like Gemini Pro. This is the "planner" agent that requires a deep understanding of context and the ability to reason.

However, once the plan is established, the individual tasks within that plan, such as looking up flight prices, checking hotel availability, or finding restaurant reviews, are essentially simple, repetitive web queries. These "tool function calls" can be executed by a faster and more affordable model like Gemini Flash. It is easier to visualize why the affordable model can be used for these straightforward web searches, while the intricate planning phase requires the greater intelligence of the more advanced model to ensure a coherent and logical travel plan.

Google's ADK supports this approach through its multi-agent architecture, which allows for modular and scalable applications. Different agents can handle specialized tasks. Model flexibility enables the direct use of various Gemini models, including both Gemini Pro and Gemini Flash, or integration of other models through LiteLLM. The ADK's orchestration capabilities support dynamic, LLM-driven routing for adaptive behavior. Built-in evaluation features allow systematic assessment of agent performance, which can be used for system refinement (see the Chapter on Evaluation and Monitoring).

Next, two agents with identical setup but utilizing different models and costs will be defined.

# Conceptual Python-like structure, not runnable code
from google.adk.agents import Agent
# from google.adk.models.lite_llm import LiteLlm  # If using models not directly supported by ADK's default Agent

# Agent using the more expensive Gemini Pro 2.5
gemini_pro_agent = Agent(
    name="GeminiProAgent",
    model="gemini-2.5-pro",  # Placeholder for actual model name if different
    description="A highly capable agent for complex queries.",
    instruction="You are an expert assistant for complex problem-solving.",
)

# Agent using the less expensive Gemini Flash 2.5
geminiflash_agent = Agent(
    name="GeminiFlashAgent",
    model="gemini-2.5-flash",  # Placeholder for actual model name if different
    description="A fast and efficient agent for simple queries.",
    instruction="You are a quick assistant for straightforward questions.",
)

A Router Agent can direct queries based on simple metrics like query length, where shorter queries go to less expensive models and longer queries to more capable models. However, a more sophisticated Router Agent can utilize either LLM or ML models to analyze query nuances and complexity. This LLM router can determine which downstream language model is most suitable. For example, a query requesting a factual recall is routed to a flash model, while a complex query requiring deep analysis is routed to a pro model.

Optimization techniques can further enhance the LLM router's effectiveness. Prompt tuning involves crafting prompts to guide the router LLM for better routing decisions. Fine-tuning the LLM router on a dataset of queries and their optimal model choices improves its accuracy and efficiency. This dynamic routing capability balances response quality with cost-effectiveness.

# Conceptual Python-like structure, not runnable code
import asyncio
from typing import AsyncGenerator

from google.adk.agents import Agent, BaseAgent
from google.adk.agents.invocation_context import InvocationContext
from google.adk.events import Event


class QueryRouterAgent(BaseAgent):
    name: str = "QueryRouter"
    description: str = "Routes user queries to the appropriate LLM agent based on complexity."

    async def _run_async_impl(self, context: InvocationContext) -> AsyncGenerator[Event, None]:
        user_query = context.current_message.text  # Assuming text input
        query_length = len(user_query.split())  # Simple metric: number of words

        if query_length < 20:  # Example threshold for simplicity vs. complexity
            print(f"Routing to Gemini Flash Agent for short query (length: {query_length})")
            # In a real ADK setup, you would 'transfer_to_agent' or directly invoke
            # For demonstration, we'll simulate a call and yield its response
            response = await geminiflash_agent.run_async(context.current_message)
            yield Event(author=self.name, content=f"Flash Agent processed: {response}")
        else:
            print(f"Routing to Gemini Pro Agent for long query (length: {query_length})")
            response = await gemini_pro_agent.run_async(context.current_message)
            yield Event(author=self.name, content=f"Pro Agent processed: {response}")

The Critique Agent evaluates responses from language models, providing feedback that serves several functions. For self-correction, it identifies errors or inconsistencies, prompting the answering agent to refine its output for improved quality. It also systematically assesses responses for performance monitoring, tracking metrics like accuracy and relevance, which are used for optimization.

Additionally, its feedback can signal reinforcement learning or fine-tuning; consistent identification of inadequate Flash model responses, for instance, can refine the router agent's logic. While not directly managing the budget, the Critique Agent contributes to indirect budget management by identifying suboptimal routing choices, such as directing simple queries to a Pro model or complex queries to a Flash model, which leads to poor results. This informs adjustments that improve resource allocation and cost savings.
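One way to picture this feedback loop is a small tracker that records the critic's verdict per model and tightens a length-based routing rule when the cheaper model is rejected too often. This is a sketch under stated assumptions: RoutingStats, adjust_threshold, the 20-word starting threshold, and the 20% tolerance are all hypothetical values chosen for illustration.

```python
from collections import defaultdict


class RoutingStats:
    """Counts how often the critic accepted or rejected each model's answers."""

    def __init__(self) -> None:
        self._total = defaultdict(int)
        self._rejected = defaultdict(int)

    def record(self, model: str, accepted: bool) -> None:
        self._total[model] += 1
        if not accepted:
            self._rejected[model] += 1

    def rejection_rate(self, model: str) -> float:
        total = self._total[model]
        return self._rejected[model] / total if total else 0.0


def adjust_threshold(threshold: int, flash_rejection_rate: float,
                     max_rate: float = 0.2, step: int = 2, floor: int = 4) -> int:
    """Lower the word-count threshold so fewer queries reach the cheaper model
    when the critic rejects too many of its answers."""
    if flash_rejection_rate > max_rate:
        return max(floor, threshold - step)
    return threshold


stats = RoutingStats()
for verdict in (False, True, False, True, True):  # simulated critic verdicts
    stats.record("flash", accepted=verdict)

new_threshold = adjust_threshold(20, stats.rejection_rate("flash"))
print(stats.rejection_rate("flash"), new_threshold)
```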

The Critique Agent can be configured to review either only the generated text from the answering agent or both the original query and the generated text, enabling a comprehensive evaluation of the response's alignment with the initial question.

CRITIC_SYSTEM_PROMPT = """
You are the **Critic Agent**, serving as the quality assurance arm of our collaborative research assistant system. Your primary function is to **meticulously review and challenge** information from the Researcher Agent, guaranteeing **accuracy, completeness, and unbiased presentation**. Your duties encompass:

* **Assessing research findings** for factual correctness, thoroughness, and potential leanings.
* **Identifying any missing data** or inconsistencies in reasoning.
* **Raising critical questions** that could refine or expand the current understanding.
* **Offering constructive suggestions** for enhancement or exploring different angles.
* **Validating that the final output is comprehensive** and balanced.

All criticism must be constructive. Your goal is to fortify the research, not invalidate it. Structure your feedback clearly, drawing attention to specific points for revision. Your overarching aim is to ensure the final research product meets the highest possible quality standards.
"""

The Critic Agent operates based on a predefined system prompt that outlines its role, responsibilities, and feedback approach. A well-designed prompt for this agent must clearly establish its function as an evaluator. It should specify the areas for critical focus and emphasize providing constructive feedback rather than mere dismissal. The prompt should also encourage the identification of both strengths and weaknesses, and it must guide the agent on how to structure and present its feedback.
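The two review modes mentioned earlier (critiquing only the generated text, or the original query together with the generated text) can be sketched as a small message builder. This is an illustrative assumption about how the inputs might be assembled: `build_critique_messages` and the shortened `CRITIC_PROMPT` placeholder are hypothetical names, and the message format simply follows the common role/content chat convention.

```python
from typing import Optional

# Short placeholder standing in for a full critic system prompt.
CRITIC_PROMPT = "You are the Critic Agent. Review the answer and give constructive feedback."


def build_critique_messages(answer: str, query: Optional[str] = None) -> list:
    """Build chat messages for a critic model.

    If `query` is None the critic sees only the answer; otherwise it sees both
    the original query and the answer, so it can judge alignment with the question.
    """
    if query is None:
        user_content = f"Answer to review:\n{answer}"
    else:
        user_content = f"Original query:\n{query}\n\nAnswer to review:\n{answer}"
    return [
        {"role": "system", "content": CRITIC_PROMPT},
        {"role": "user", "content": user_content},
    ]


text_only = build_critique_messages("Canberra is the capital of Australia.")
with_query = build_critique_messages(
    "Canberra is the capital of Australia.",
    query="What is the capital of Australia?",
)
print(with_query[1]["content"])
```

Including the query costs extra prompt tokens on every critique, so the text-only mode may be the cheaper default when alignment with the question is not in doubt.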

Hands-On Code with OpenAI

This system uses a resource-aware optimization strategy to handle user queries efficiently. It first classifies each query into one of three categories to determine the most appropriate and cost-effective processing pathway. This approach avoids wasting computational resources on simple requests while ensuring complex queries get the necessary attention. The three categories are:

simple: For straightforward questions that can be answered directly without complex reasoning or external data.

reasoning: For queries that require logical deduction or multi-step thought processes, which are routed to more powerful models.

internet_search: For questions needing current information, which automatically triggers a Google Search to provide an up-to-date answer.

The code is under the MIT license and available on GitHub: https://github.com/mahtabsyed/21-Agentic-Patterns/blob/main/16_Resource_Aware_Opt_LLM_Reflection_v2.ipynb

# MIT License
# Copyright (c) 2025 Mahtab Syed
# https://www.linkedin.com/in/mahtabsyed/

import os
import requests
import json
from dotenv import load_dotenv
from openai import OpenAI

# Load environment variables
load_dotenv()
OPENAI_API_KEY = os.getenv("OPENAI_API_KEY")
GOOGLE_CUSTOM_SEARCH_API_KEY = os.getenv("GOOGLE_CUSTOM_SEARCH_API_KEY")
GOOGLE_CSE_ID = os.getenv("GOOGLE_CSE_ID")

if not OPENAI_API_KEY or not GOOGLE_CUSTOM_SEARCH_API_KEY or not GOOGLE_CSE_ID:
    raise ValueError(
        "Please set OPENAI_API_KEY, GOOGLE_CUSTOM_SEARCH_API_KEY, and GOOGLE_CSE_ID in your .env file."
    )

client = OpenAI(api_key=OPENAI_API_KEY)

# --- Step 1: Classify the Prompt ---
def classify_prompt(prompt: str) -> dict:
    system_message = {
        "role": "system",
        "content": (
            "You are a classifier that analyzes user prompts and returns one of three categories ONLY:\n\n"
            "- simple\n"
            "- reasoning\n"
            "- internet_search\n\n"
            "Rules:\n"
            "- Use 'simple' for direct factual questions that need no reasoning or current events.\n"
            "- Use 'reasoning' for logic, math, or multi-step inference questions.\n"
            "- Use 'internet_search' if the prompt refers to current events, recent data, or things not in your training data.\n\n"
            "Respond ONLY with JSON like:\n"
            '{ "classification": "simple" }'
        ),
    }
    user_message = {"role": "user", "content": prompt}
    response = client.chat.completions.create(
        model="gpt-4o", messages=[system_message, user_message], temperature=1
    )
    reply = response.choices[0].message.content
    return json.loads(reply)

# --- Step 2: Google Search ---
def google_search(query: str, num_results=1) -> list:
    url = "https://www.googleapis.com/customsearch/v1"
    params = {
        "key": GOOGLE_CUSTOM_SEARCH_API_KEY,
        "cx": GOOGLE_CSE_ID,
        "q": query,
        "num": num_results,
    }
    try:
        response = requests.get(url, params=params)
        response.raise_for_status()
        results = response.json()
        if "items" in results and results["items"]:
            return [
                {
                    "title": item.get("title"),
                    "snippet": item.get("snippet"),
                    "link": item.get("link"),
                }
                for item in results["items"]
            ]
        else:
            return []
    except requests.exceptions.RequestException as e:
        # Report the failure and return an empty list so the caller can
        # treat it the same way as "no results found".
        print(f"Search error: {e}")
        return []

# --- Step 3: Generate Response ---
def generate_response(prompt: str, classification: str, search_results=None) -> tuple:
    if classification == "simple":
        model = "gpt-4o-mini"
        full_prompt = prompt
    elif classification == "reasoning":
        model = "o4-mini"
        full_prompt = prompt
    elif classification == "internet_search":
        model = "gpt-4o"
        # Convert each search result dict to a readable string
        if search_results:
            search_context = "\n".join(
                [
                    f"Title: {item.get('title')}\nSnippet: {item.get('snippet')}\nLink: {item.get('link')}"
                    for item in search_results
                ]
            )
        else:
            search_context = "No search results found."
        full_prompt = f"""Use the following web results to answer the user query:

{search_context}

Query: {prompt}"""
    else:
        # Guard against an unexpected label from the classifier
        raise ValueError(f"Unknown classification: {classification}")

    response = client.chat.completions.create(
        model=model,
        messages=[{"role": "user", "content": full_prompt}],
        temperature=1,
    )
    return response.choices[0].message.content, model

# --- Step 4: Combined Router ---
def handle_prompt(prompt: str) -> dict:
    classification_result = classify_prompt(prompt)
    # Remove or comment out the next line to avoid duplicate printing
    # print("\nClassification Result:", classification_result)
    classification = classification_result["classification"]
    search_results = None
    if classification == "internet_search":
        search_results = google_search(prompt)
        # print("\nSearch Results:", search_results)
    answer, model = generate_response(prompt, classification, search_results)
    return {"classification": classification, "response": answer, "model": model}

test_prompt = "What is the capital of Australia?"
# test_prompt = "Explain the impact of quantum computing on cryptography."
# test_prompt = "When does the Australian Open 2026 start, give me full date?"
result = handle_prompt(test_prompt)
print("Classification:", result["classification"])
print("Model Used:", result["model"])
print("Response:\n", result["response"])

This Python code implements a prompt routing system to answer user questions. It begins by loading the necessary API keys for OpenAI and Google Custom Search from a .env file. The core functionality lies in classifying the user's prompt into one of three categories: simple, reasoning, or internet_search. A dedicated function uses an OpenAI model for this classification step. If the prompt requires current information, a Google search is performed using the Google Custom Search API. Another function then generates the final response, selecting an appropriate OpenAI model based on the classification. For internet search queries, the search results are provided as context to the model. The main handle_prompt function orchestrates this workflow, calling the classification and search (if needed) functions before generating the response. It returns the classification, the model used, and the generated answer. In this way, each type of query is directed to the model and data source best suited to it.
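The classification step depends on the model replying with clean JSON, which is not guaranteed: a reply may contain extra text around the JSON or an unexpected label. The sketch below shows one defensive way to parse such a reply. The helper name `parse_classification` and the choice of "simple" as the fallback category are assumptions made for illustration; they are not part of the notebook.

```python
import json

VALID_CATEGORIES = {"simple", "reasoning", "internet_search"}


def parse_classification(reply: str, default: str = "simple") -> str:
    """Extract the category from a classifier reply, tolerating common deviations."""
    text = reply.strip()
    # Keep only the outermost {...} span in case the model added surrounding text.
    start, end = text.find("{"), text.rfind("}")
    if start != -1 and end > start:
        text = text[start : end + 1]
    try:
        category = json.loads(text).get("classification")
    except (json.JSONDecodeError, AttributeError):
        # Not valid JSON, or valid JSON that is not an object.
        return default
    if isinstance(category, str) and category in VALID_CATEGORIES:
        return category
    return default


print(parse_classification('Sure! {"classification": "reasoning"}'))  # reasoning
print(parse_classification("I think this is simple."))                # simple
```

Falling back to the cheapest category keeps costs bounded when the classifier misbehaves; a system that values answer quality over cost might prefer to fall back to "reasoning" instead.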

Hands-On Code Example (OpenRouter)

OpenRouter offers a unified interface to hundreds of AI models via a single API endpoint. It provides automated failover and cost-optimization, with easy integration through your preferred SDK or framework.

import requests
import json

response = requests.post(
    url="https://openrouter.ai/api/v1/chat/completions",
    headers={
        "Authorization": "Bearer <OPENROUTER_API_KEY>",
        "HTTP-Referer": "<YOUR_SITE_URL>",  # Optional. Site URL for rankings on openrouter.ai.
        "X-Title": "<YOUR_SITE_NAME>",  # Optional. Site title for rankings on openrouter.ai.
    },
    data=json.dumps({
        "model": "openai/gpt-4o",  # Optional
        "messages": [
            {"role": "user", "content": "What is the meaning of life?"}
        ]
    })
)

This code snippet uses the requests library to interact with the OpenRouter API. It sends a POST request to the chat completion endpoint with a user message. The request includes authorization headers with an API key and optional site information. The goal is to get a response from a specified language model, in this case, "openai/gpt-4o".
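Once the request returns, the reply still has to be read out of the JSON body. The sketch below assumes the body follows the OpenAI-compatible chat completions shape (a top-level `model` field and the text under `choices[0].message.content`) and that failures are reported under an `error` key; both should be checked against the current OpenRouter documentation. The helper `extract_reply` and the sample payload are hypothetical.

```python
def extract_reply(payload: dict) -> tuple:
    """Return (model_id, assistant_text) from a chat-completions style payload."""
    if "error" in payload:
        raise RuntimeError(f"API error: {payload['error']}")
    model_id = payload.get("model", "unknown")
    text = payload["choices"][0]["message"]["content"]
    return model_id, text


# Hand-written sample payload for illustration (not real API output).
sample = {
    "model": "openai/gpt-4o",
    "choices": [{"message": {"role": "assistant", "content": "42"}}],
}
model_id, text = extract_reply(sample)
print(model_id, text)
```

With a live request, the payload would come from `response.json()`.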

OpenRouter offers two distinct methods for routing a request and determining which model processes it.

Automated Model Selection: This function routes a request to an optimized model chosen from a curated set of available models. The selection is predicated on the specific content of the user's prompt. The identifier of the model that ultimately processes the request is returned in the response's metadata.

{
  "model": "openrouter/auto",
  ... // Other params
}

Sequential Model Fallback: This mechanism provides operational redundancy by allowing users to specify a hierarchical list of models. The system will first attempt to process the request with the primary model designated in the sequence. Should this primary model fail to respond due to any number of error conditions, such as service unavailability, rate-limiting, or content filtering, the system will automatically re-route the request to the next specified model in the sequence. This process continues until a model in the list successfully executes the request or the list is exhausted. The final cost of the operation and the model identifier returned in the response will correspond to the model that successfully completed the computation.

{
  "models": ["anthropic/claude-3.5-sonnet", "gryphe/mythomax-l2-13b"],
  ... // Other params
}

OpenRouter offers a detailed leaderboard (https://openrouter.ai/rankings) that ranks available AI models by their cumulative token production. It also offers the latest models from different providers (ChatGPT, Gemini, Claude) (see Fig. 1).

[Screenshot of the OpenRouter home page, "The Unified Interface For LLMs", listing featured models (Gemini 2.5 Pro, GPT-5 Chat, Claude Sonnet 4) with their weekly token volume, latency, and weekly growth.]

Fig. 1: OpenRouter Web site (https://openrouter.ai/)

Beyond Dynamic Model Switching: A Spectrum of Agent Resource Optimizations

Resource-aware optimization is essential for building intelligent agent systems that operate efficiently and effectively within real-world constraints. Dynamic model switching is one technique among several:

Dynamic Model Switching is a critical technique involving the strategic selection of large language models based on the intricacies of the task at hand and the available computational resources. When faced with simple queries, a lightweight, cost-effective LLM can be deployed, whereas complex, multifaceted problems necessitate the utilization of more sophisticated and resource-intensive models.

Adaptive Tool Use & Selection ensures agents can intelligently choose from a suite of tools, selecting the most appropriate and efficient one for each specific sub-task, with careful consideration given to factors like API usage costs, latency, and execution time. This dynamic tool selection enhances overall system efficiency by optimizing the use of external APIs and services.
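Cost- and latency-aware tool selection can be sketched as a simple scoring rule: among the tools that are good enough for the sub-task, pick the one with the lowest weighted cost. Everything in this example is hypothetical, including the `Tool` fields, the tool names, the numbers, and the weights; a real agent would estimate these values from measurements.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Tool:
    name: str
    cost_usd: float    # estimated cost per call
    latency_s: float   # estimated latency per call
    quality: float     # expected usefulness for the sub-task, in [0, 1]


def select_tool(tools, min_quality, cost_weight=1.0, latency_weight=0.01):
    """Pick the cheapest and fastest tool that still meets the quality bar."""
    eligible = [t for t in tools if t.quality >= min_quality]
    if not eligible:
        # Nothing meets the bar: fall back to the highest-quality tool.
        return max(tools, key=lambda t: t.quality)
    return min(
        eligible,
        key=lambda t: cost_weight * t.cost_usd + latency_weight * t.latency_s,
    )


tools = [
    Tool("local_cache", cost_usd=0.0, latency_s=0.01, quality=0.4),
    Tool("web_search", cost_usd=0.005, latency_s=1.2, quality=0.8),
    Tool("premium_api", cost_usd=0.05, latency_s=0.6, quality=0.95),
]
print(select_tool(tools, min_quality=0.7).name)  # web_search
```

The weights express how much one second of latency is worth in dollars, which is an application-specific judgment.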

Contextual Pruning & Summarization plays a vital role in managing the amount of information processed by agents, strategically minimizing the prompt token count and reducing inference costs by intelligently summarizing and selectively retaining only the most relevant information from the interaction history, preventing unnecessary computational overhead.
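The pruning half of this technique can be sketched as keeping the system message plus as many of the most recent turns as fit a token budget. The function names are hypothetical, and the word-count token estimate is a deliberate simplification; a real system would use the model's tokenizer and might summarize the dropped turns instead of discarding them.

```python
def estimate_tokens(text: str) -> int:
    """Crude token estimate (word count); a real tokenizer would be more accurate."""
    return len(text.split())


def prune_history(messages: list, max_tokens: int) -> list:
    """Keep the system message plus the most recent turns that fit the budget."""
    system = [m for m in messages if m["role"] == "system"]
    turns = [m for m in messages if m["role"] != "system"]
    budget = max_tokens - sum(estimate_tokens(m["content"]) for m in system)

    kept = []
    for message in reversed(turns):        # walk from newest to oldest
        cost = estimate_tokens(message["content"])
        if cost > budget:
            break                          # stop at the first turn that does not fit
        kept.append(message)
        budget -= cost
    return system + list(reversed(kept))   # restore chronological order


history = [
    {"role": "system", "content": "You are a helpful assistant."},
    {"role": "user", "content": "Tell me about the history of Canberra in detail."},
    {"role": "assistant", "content": "Canberra was founded in 1913 as the capital."},
    {"role": "user", "content": "What is its population?"},
]
pruned = prune_history(history, max_tokens=18)
print([m["role"] for m in pruned])  # ['system', 'assistant', 'user']
```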

Proactive Resource Prediction involves anticipating resource demands by forecasting future workloads and system requirements, which allows for proactive allocation and management of resources, ensuring system responsiveness and preventing bottlenecks.

Cost-Sensitive Exploration in multi-agent systems extends optimization considerations to encompass communication costs alongside traditional computational costs, influencing the strategies employed by agents to collaborate and share information, aiming to minimize the overall resource expenditure.

Energy-Efficient Deployment is specifically tailored for environments with stringent resource constraints, aiming to minimize the energy footprint of intelligent agent systems, extending operational time and reducing overall running costs.

Parallelization & Distributed Computing Awareness leverages distributed resources to enhance the processing power and throughput of agents, distributing computational workloads across multiple machines or processors to achieve greater efficiency and faster task completion.

Learned Resource Allocation Policies introduce a learning mechanism, enabling agents to adapt and optimize their resource allocation strategies over time based on feedback and performance metrics, improving efficiency through continuous refinement.

Graceful Degradation and Fallback Mechanisms ensure that intelligent agent systems can continue to function, albeit perhaps at a reduced capacity, even when resource constraints are severe, gracefully degrading performance and falling back to alternative strategies to maintain operation and provide essential functionality.
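A fallback chain is one simple way to realize graceful degradation: try the preferred handler first and move to cheaper or more limited alternatives when it fails. The sketch below uses stand-in functions in place of real model calls; `call_with_fallback` and the three handlers are hypothetical names, and the outage is simulated.

```python
from typing import Sequence


def call_with_fallback(prompt: str, handlers: Sequence[tuple]) -> tuple:
    """Try each (name, handler) in order; return (name, result) from the first success."""
    errors = []
    for name, handler in handlers:
        try:
            return name, handler(prompt)
        except Exception as exc:  # broad on purpose: any failure triggers fallback
            errors.append(f"{name}: {exc}")
    raise RuntimeError("All handlers failed: " + "; ".join(errors))


def primary_model(prompt: str) -> str:
    raise TimeoutError("rate limit exceeded")  # simulated outage


def small_model(prompt: str) -> str:
    return f"[small model] short answer to: {prompt}"


def canned_reply(prompt: str) -> str:
    return "The service is busy. Please try again later."


used, answer = call_with_fallback(
    "What is the capital of Australia?",
    [("primary", primary_model), ("small", small_model), ("canned", canned_reply)],
)
print(used, "->", answer)
```

Ending the chain with a handler that cannot fail, such as a canned reply, means the system always returns something, even if at reduced capacity.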

At a Glance

What: Resource-Aware Optimization addresses the challenge of managing the consumption of computational, temporal, and financial resources in intelligent systems. LLM-based applications can be expensive and slow, and selecting the best model or tool for every task is often inefficient. This creates a fundamental trade-off between the quality of a system's output and the resources required to produce it.

Without a dynamic management strategy, systems cannot adapt to varying task complexities or operate within budgetary and performance constraints.

Why: The standardized solution is to build an agentic system that intelligently monitors and allocates resources based on the task at hand. This pattern typically employs a "Router Agent" to first classify the complexity of an incoming request. The request is then forwarded to the most suitable LLM or tool—a fast, inexpensive model for simple queries, and a more powerful one for complex reasoning. A "Critique Agent" can further refine the process by evaluating the quality of the response, providing feedback to improve the routing logic over time. This dynamic, multi-agent approach ensures the system operates efficiently, balancing response quality with cost-effectiveness.

Rule of thumb: Use this pattern when operating under strict financial budgets for API calls or computational power, building latency-sensitive applications where quick response times are critical, deploying agents on resource-constrained hardware such as edge devices with limited battery life, programmatically balancing the trade-off between response quality and operational cost, and managing complex, multi-step workflows where different tasks have varying resource requirements.
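Operating under a strict budget can be sketched as a tracker that the router consults before choosing a model. The class, the model tiers, and the per-call prices below are all made up for illustration; real costs depend on the provider and on token counts.

```python
from dataclasses import dataclass


@dataclass
class BudgetTracker:
    """Tracks spend against a fixed budget (all prices are made-up examples)."""

    budget_usd: float
    spent_usd: float = 0.0

    def remaining(self) -> float:
        return self.budget_usd - self.spent_usd

    def charge(self, amount_usd: float) -> None:
        self.spent_usd += amount_usd


# Hypothetical per-call cost estimates.
MODEL_COST = {"large": 0.05, "small": 0.002}


def choose_model(is_complex: bool, tracker: BudgetTracker) -> str:
    """Prefer the large model for complex tasks, but only while the budget allows it."""
    if is_complex and tracker.remaining() >= MODEL_COST["large"]:
        return "large"
    if tracker.remaining() >= MODEL_COST["small"]:
        return "small"
    raise RuntimeError("Budget exhausted")


tracker = BudgetTracker(budget_usd=0.06)
first = choose_model(is_complex=True, tracker=tracker)   # budget allows the large model
tracker.charge(MODEL_COST[first])
second = choose_model(is_complex=True, tracker=tracker)  # downgraded: too little budget left
print(first, second)  # large small
```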

Visual Summary

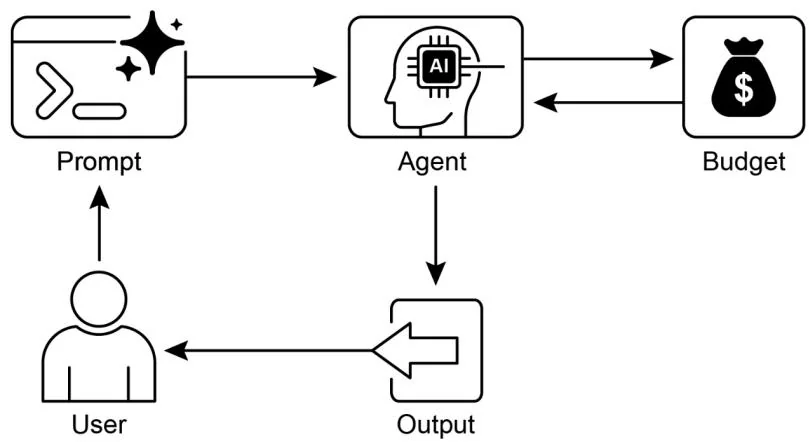

Fig. 2: Resource-Aware Optimization Design Pattern

Key Takeaways

Resource-Aware Optimization is Essential: Intelligent agents can manage computational, temporal, and financial resources dynamically. Decisions regarding model usage and execution paths are made based on real-time constraints and objectives.

Multi-Agent Architecture for Scalability: Google's ADK provides a multi-agent framework, enabling modular design. Different agents (answering, routing, critique) handle specific tasks.

Dynamic, LLM-Driven Routing: A Router Agent directs queries to language models (Gemini Flash for simple, Gemini Pro for complex) based on query complexity and budget. This optimizes cost and performance.

Critique Agent Functionality: A dedicated Critique Agent provides feedback for self-correction, performance monitoring, and refining routing logic, enhancing system effectiveness.

Optimization Through Feedback and Flexibility: Evaluation capabilities for critique and model integration flexibility contribute to adaptive and self-improving system behavior.

Additional Resource-Aware Optimizations: Other methods include Adaptive Tool Use & Selection, Contextual Pruning & Summarization, Proactive Resource Prediction, Cost-Sensitive Exploration in Multi-Agent Systems, Energy-Efficient Deployment, Parallelization & Distributed Computing Awareness, Learned Resource Allocation Policies, Graceful Degradation and Fallback Mechanisms, and Prioritization of Critical Tasks.

Conclusions

Resource-aware optimization is essential for the development of intelligent agents, enabling efficient operation within real-world constraints. By managing computational, temporal, and financial resources, agents can achieve optimal performance and cost-effectiveness. Techniques such as dynamic model switching, adaptive tool use, and contextual pruning are crucial for attaining these efficiencies. Advanced strategies, including learned resource allocation policies and graceful degradation, enhance an agent's adaptability and resilience under varying conditions. Integrating these optimization principles into agent design is fundamental for building scalable, robust, and sustainable AI systems.

References

Google's Agent Development Kit (ADK): https://google.github.io/adk-docs/

Gemini 2.5 Flash & Gemini 2.5 Pro: https://aistudio.google.com/

OpenRouter: https://openrouter.ai/docs/quickstart